Parametric Problems#

Many systems depend on independent parameters that describe material properties or other physical characteristics of the phenomenon being modeled. In such cases, the operators of a reduced-order model (ROM) should be designed to vary with the system parameters. This tutorial demonstrates how to construct and evaluate a parametric ROM through an elementary example.

Problem Statement#

We consider a problem with a single scalar system parameter \(\mu > 0\).

Governing Equations

Let \(\Omega = [0,L]\subset \RR\) be the spatial domain indicated by the variable \(x\), and let \([0,T]\subset\RR\) be the time domain with variable \(t\). We consider the one-dimensional heat equation with constant non-homogeneous Dirichlet boundary conditions,

where the constant \(\mu > 0\) is a thermal diffusivity parameter, \(\alpha>0\) is constant, and \(q(x,t;\mu)\) is the unknown state variable. This is a model for a one-dimensional rod conducting heat with a fixed initial heat profile. The temperature at the ends of the rod are fixed, but heat is allowed to diffuse through the rod and flow out at the ends of the domain.

Objective

Construct a reduced-order model (ROM) which can be solved rapidly to produce approximate solutions \(q(x, t; \mu)\) to the partial differential equation given above for various choices of the diffusivity parameter \(\mu > 0\). We will observe data for a few values of \(\mu\), then use the ROM to predict the solution for the entire time domain \([0, T]\) and for new values of \(\mu\). Hence, the ROM will be predictive in the parameter \(\mu\).

import numpy as np

import scipy.sparse

import matplotlib.pyplot as plt

import opinf

opinf.utils.mpl_config()

Full-order Model Definition#

We consider the parameter domain \(\mathcal{P} = [.1,10]\subset\RR\). A finite element or finite difference discretization leads to a system of differential equations,

where \(\q:\RR\times\mathcal{P}\to\RR^n,\) \(\c:\mathcal{P}\to\RR^n,\) and \(\A:\mathcal{P}\to\RR^{n\times n}.\) This is the full-order model (FOM). The constant term \(\c(\mu)\) arises due to the nonzero boundary conditions. In this case, the parametric dependence on \(\mu\) is linear: there are \(\c^{(0)}\in\RR^{n}\) and \(\A^{(0)}\in\RR^{n\times n}\) such that \(\c(\mu) = \mu\c^{(0)}\) and \(\A(\mu) = \mu\A^{(0)}.\)

Discretization details

We take an equidistant grid \(\{x_i\}_{i=0}^{n+1} \subset \Omega\),

The boundary conditions prescribe \(q(x_0,t;\mu) = q(x_{n+1},t;\mu) = 1\). Our goal is to compute \(q(x,t)\) at the interior spatial points \(x_{1},x_{2},\ldots,x_{n}\) for various \(t\in[0,T]\), so we consider the state vector \(\q(t;\mu) = [~q(x_{1}, t;\mu)~\cdots~q(x_{n}, t;\mu)~]\trp\in\RR^n\) and derive a system governing the evolution of \(\q(t;\mu)\) in time.

Approximating the spatial derivative with a central finite difference approximation,

and using the boundary conditions \(q(0,t;\mu) = q(L,t;\mu) = 1\), we arrive at the following matrices for the FOM.

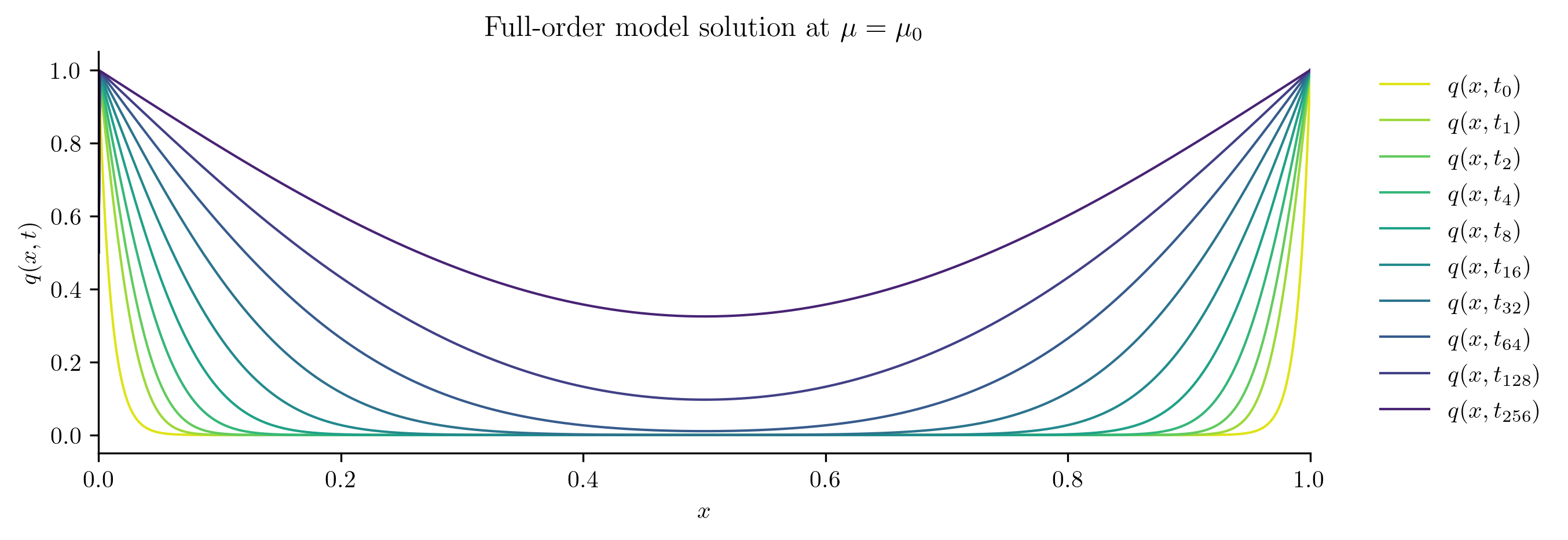

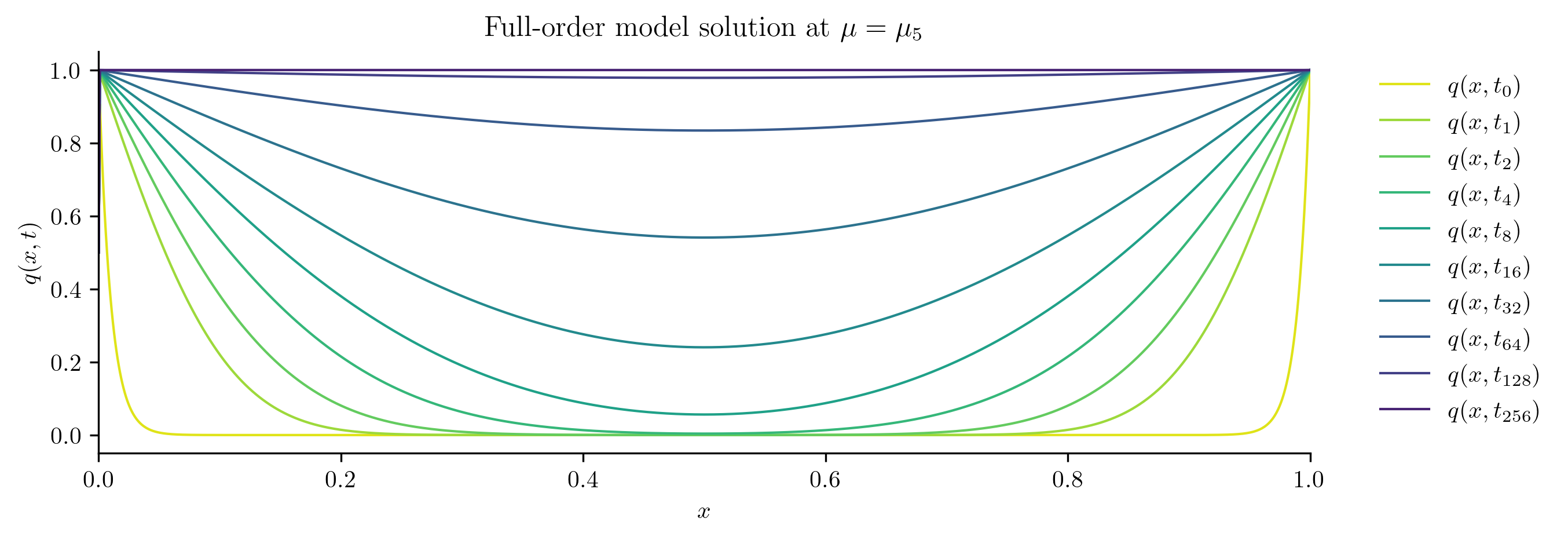

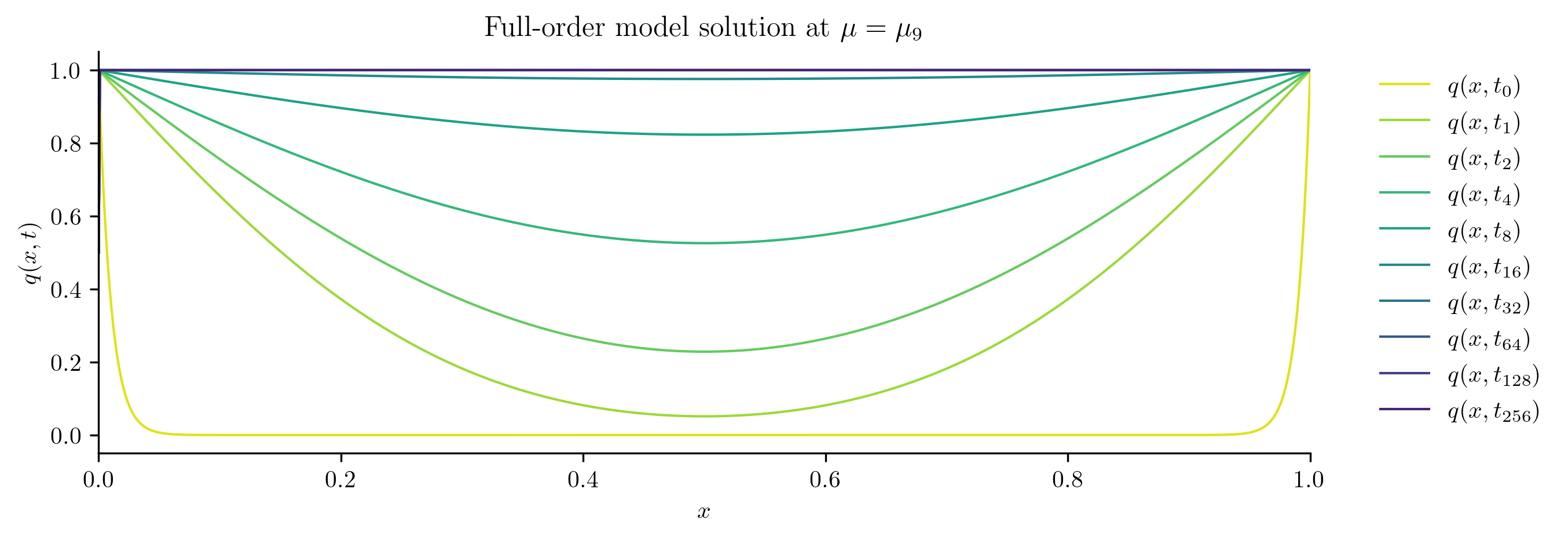

Training Data Generation#

Let \(L = 1\), \(T = 1\), and set \(\alpha = 100\). For this demo, we use \(n = 2^{10} - 1 = 1023\) spatial degrees of freedom and record the FOM solution every \(\delta t = 0.0025\) time units. For each training parameter \(\mu_i\), this results in \(k = 401\) state snapshots, organized in snapshot matrices

# Get s logarithmically spaced paraneter values in D = [.1, 10].

s = 10

training_parameters = np.logspace(-1, 1, s)

print(training_parameters)

[ 0.1 0.16681005 0.27825594 0.46415888 0.77426368 1.29154967

2.15443469 3.59381366 5.9948425 10. ]

Full-order solves...done in 3.85 s.

Spatial domain: x.shape=(1023,)

Spatial step size: dx=0.0009765625

Full time domain: t_all.shape=(401,)

Temporal step size: dt=0.002500

Full-order matrix A0: A0.shape=(1023, 1023)

Full-order vector c0: c0.shape=(1023,)

Initial condition: q0.shape=(1023,)

Training snapshots: Qs[0].shape=(1023, 401)

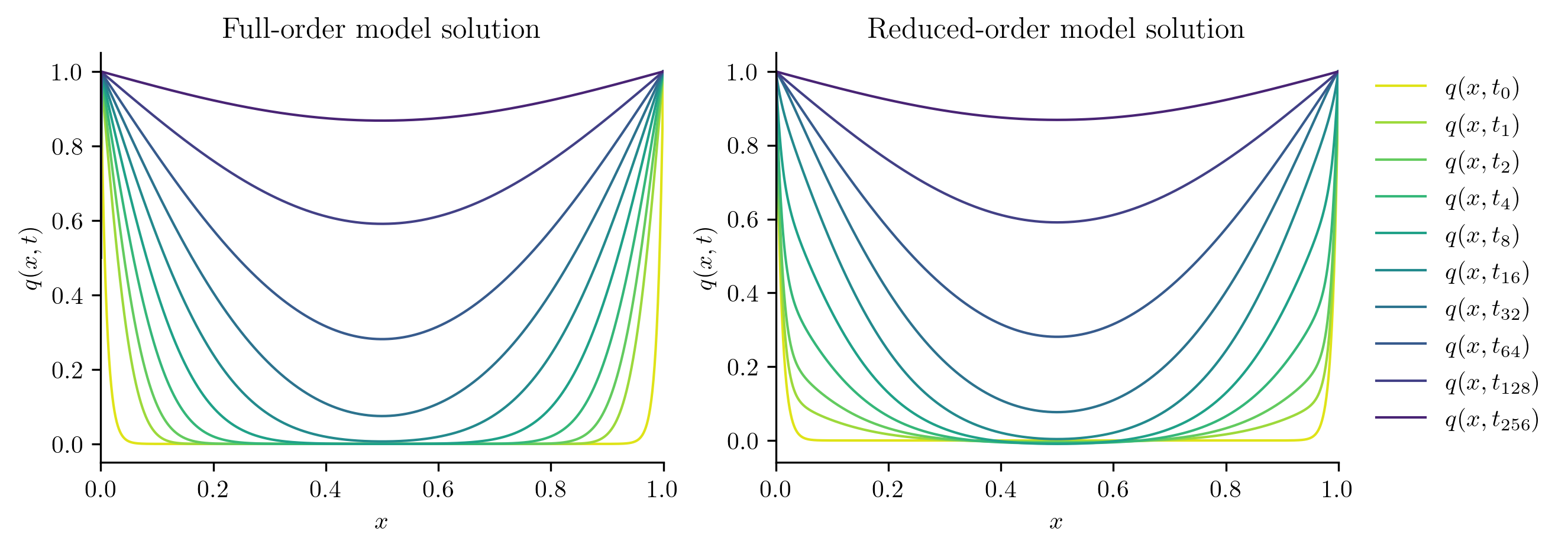

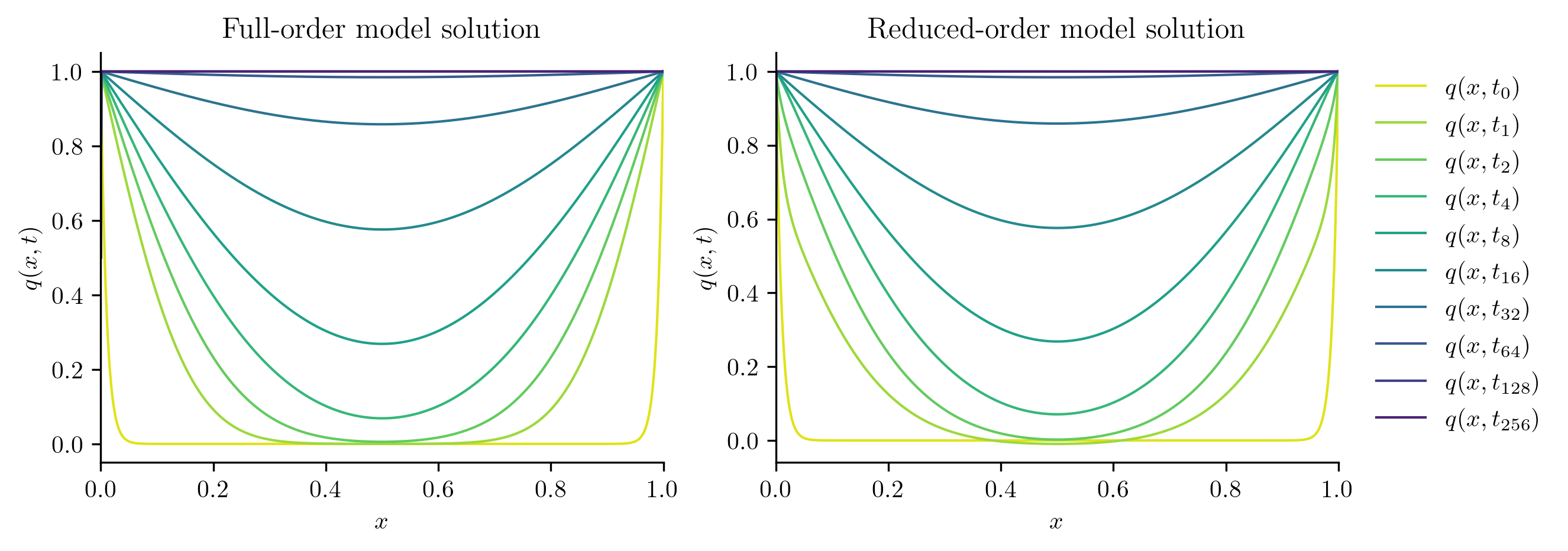

for i in [0, s // 2, s - 1]:

plot_data_space(Qs[i], rf"Full-order model solution at $\mu = \mu_{i}$")

Reduced-order Model Construction#

Now that we have parameter and snapshot data, we instantiate a opinf.roms.ParametricROM and pass the training parameter values and the corresponding state snapshots to the fit() method.

We will use a opinf.basis.PODBasis to reduce the dimension of the snapshot training data, which approximates the discretized state vector as \(\q(t;\mu) \approx \Vr\qhat(t;\mu)\) for some \(\Vr\in\RR^{n\times r}\) with orthonormal columns and \(\qhat(t)\in\RR^{r}\), with and \(r\ll n\).

Based on the FOM (9), we specify a ROM with the following structure:

where \(\chat^{(0)}\in\RR^{r}\) and \(\Ahat^{(0)}\in\RR^{r\times r}.\)

Data for the time derivative \(\ddt\qhat(t)\) are estimated in this example with sixth-order finite differences using opinf.ddt.UniformFiniteDifferencer.

The underlying least-squares problem to determine \(\chat^{(0)}\) and \(\Ahat^{(0)}\) is given by

where \(\qhat_{i,j} = \qhat(t_j;\mu_i)\in\RR^{r}\) are the state snapshots and \(\dot{\qhat}_{i,j} \approx \ddt\qhat(t;\mu_{i})|_{t=t_j}\in\RR^{r}\) are the estimated time derivatives.

Preserving Parametric Structure

An OpInf ROM should have the same structure as an intrusive Galerkin ROM. The Galerkin ROM for (9) is derived by substituting in the approximation \(\q(t;\mu)\approx\Vr\qhat(t;\mu)\), yielding

Next, left multiply by \(\Vr\trp\) and use the fact that \(\Vr\trp\Vr = \I\) to get the following:

where \(\tilde{\c}(\mu) = \Vr\trp\c(\mu)\in\RR^{r}\) and \(\tilde{\A}(\mu) = \Vr\trp\A(\mu)\Vr \in \RR^{r\times r}.\) Finally, using the formulae \(\c(\mu) = \mu\c^{(0)}\) and \(\A(\mu) = \mu\A^{(0)}\), we can further simplify to

Interpolatory and Affine Parameterizations

In this problem, the dependence on \(\mu\) in the ROM operators \(\chat(\mu)\) and \(\Ahat(\mu)\) is known from because the structure from the FOM is preserved by linear projection (see affine operators). If the dependence on \(\mu\) is not known a-priori or cannot be written in an affine form, interpolatory operators sometimes provide a feasible alternative.

rom = opinf.ParametricROM(

basis=opinf.basis.PODBasis(projection_error=1e-6),

ddt_estimator=opinf.ddt.UniformFiniteDifferencer(t_all, "ord6"),

model=opinf.models.ParametricContinuousModel(

operators=[

opinf.operators.AffineConstantOperator(1),

opinf.operators.AffineLinearOperator(1),

],

solver=opinf.lstsq.L2Solver(1e-6),

),

).fit(training_parameters, Qs)

Reduced-order Model Evaluation#

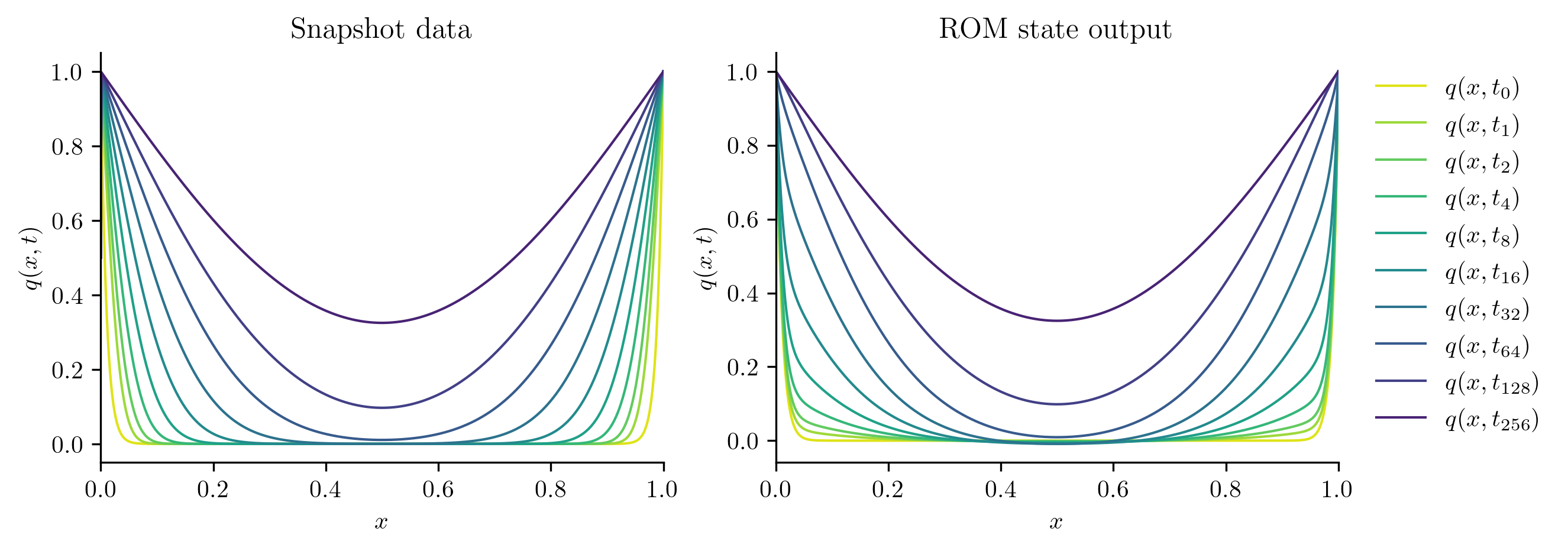

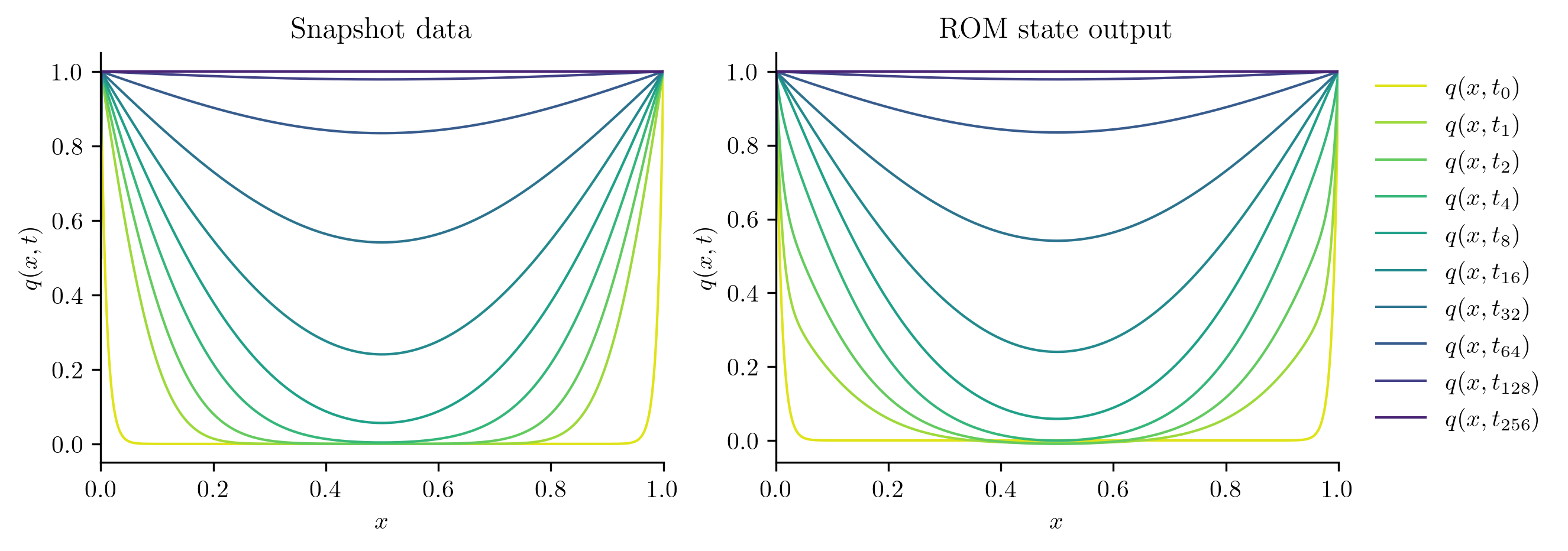

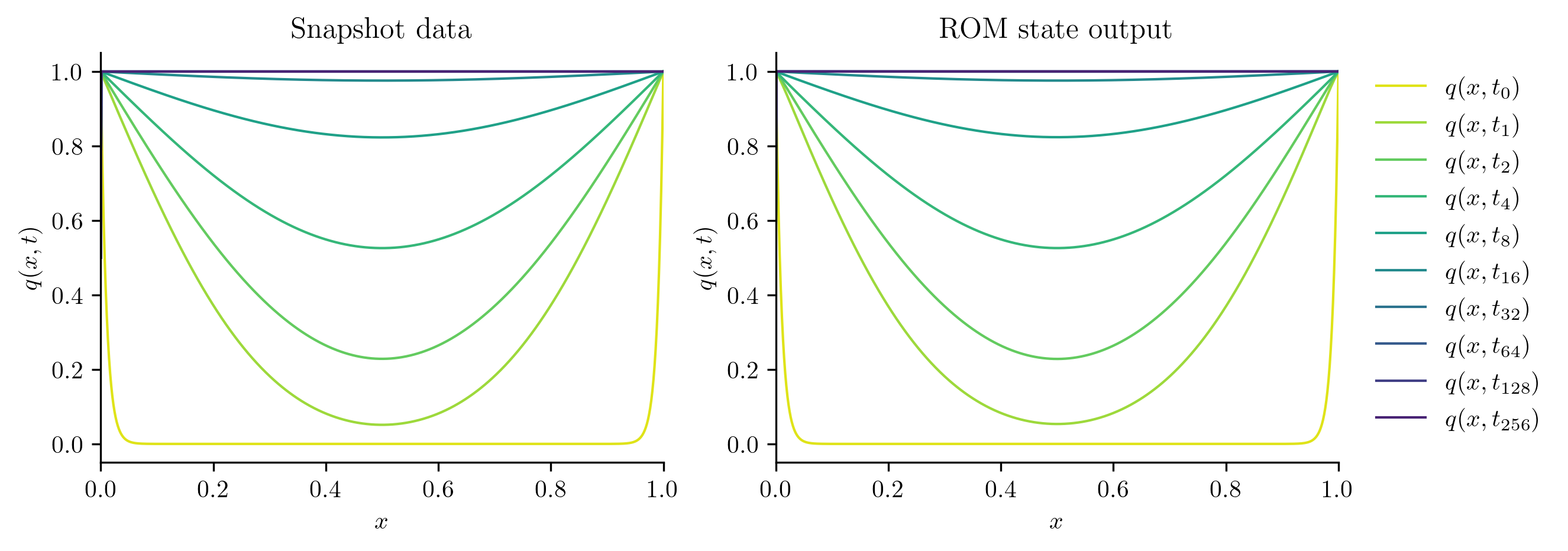

We start by checking comparing the solutions of the ROM at the training parameter values to the training snapshots.

for i in [0, s // 2, s - 1]:

with opinf.utils.TimedBlock("Reduced-order solve"):

Q_ROM = rom.predict(training_parameters[i], q0, t_all, method="BDF")

plot_two_datasets(Qs[i], "Snapshot data", Q_ROM, "ROM state output")

plt.show()

Reduced-order solve...done in 0.01 s.

Reduced-order solve...done in 0.01 s.

Reduced-order solve...done in 0.01 s.

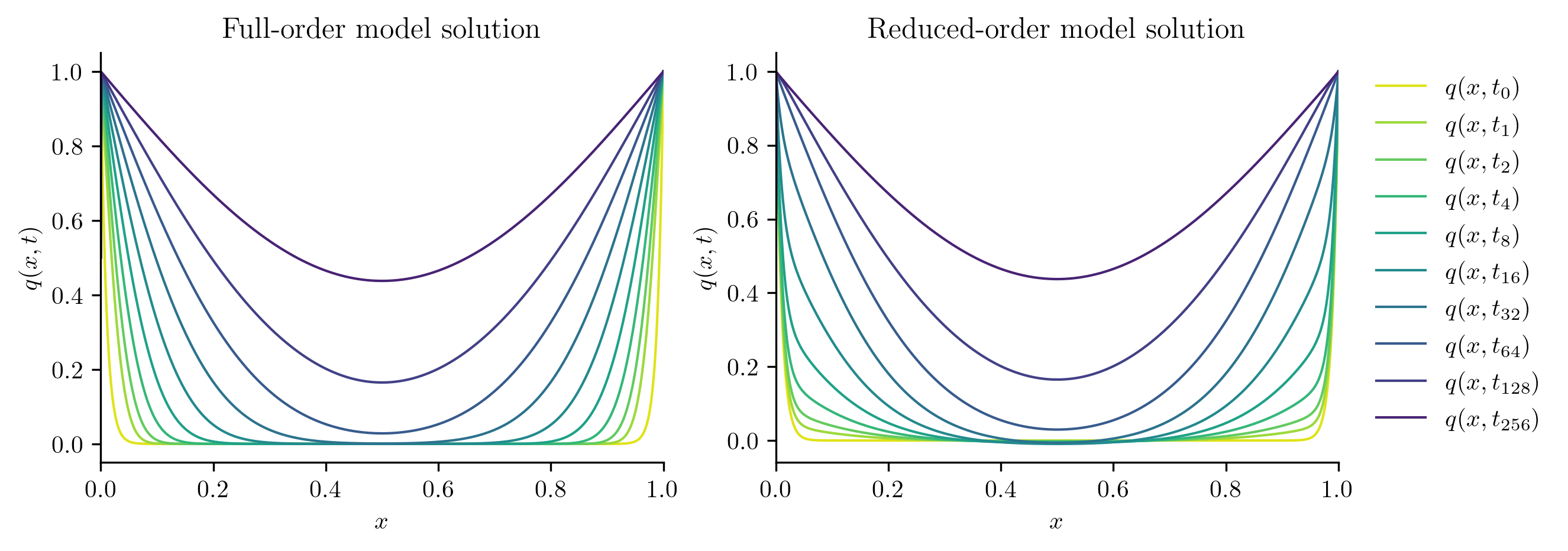

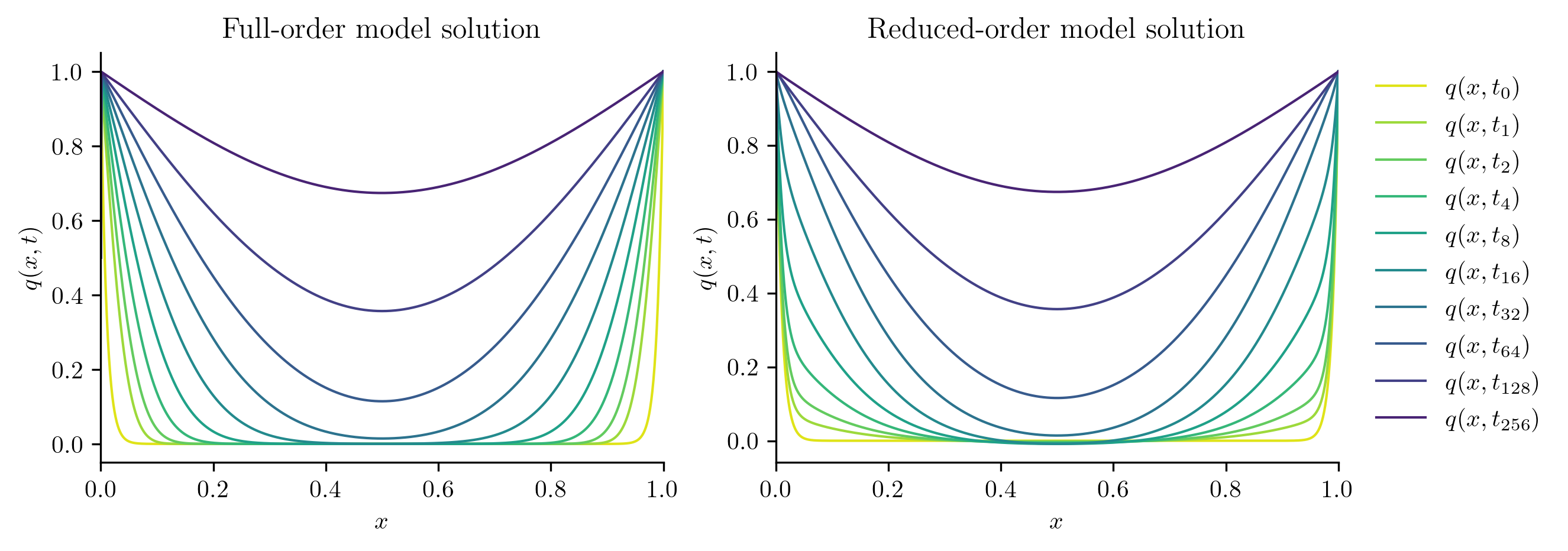

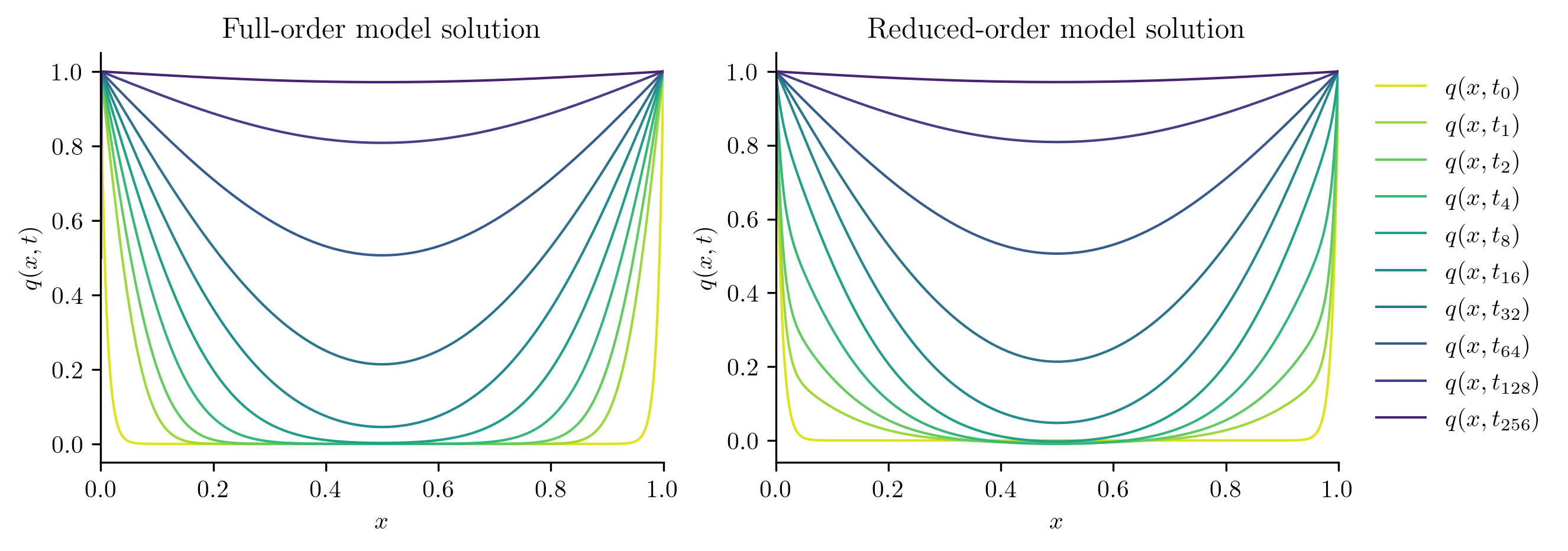

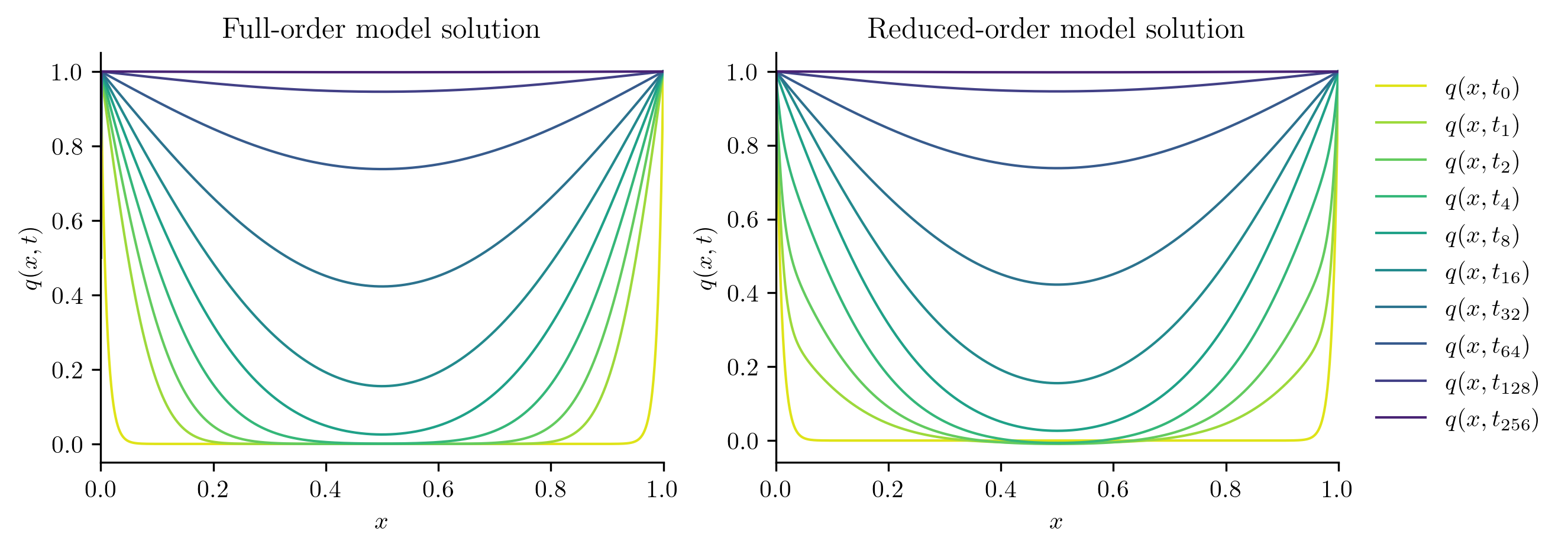

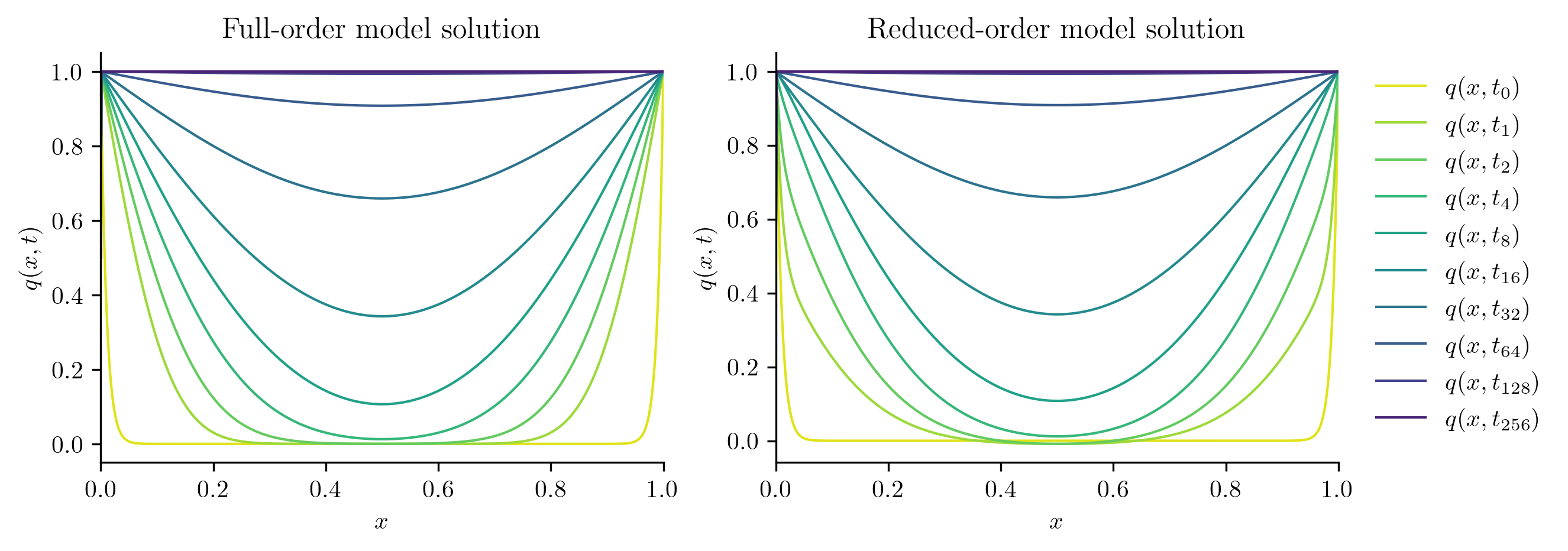

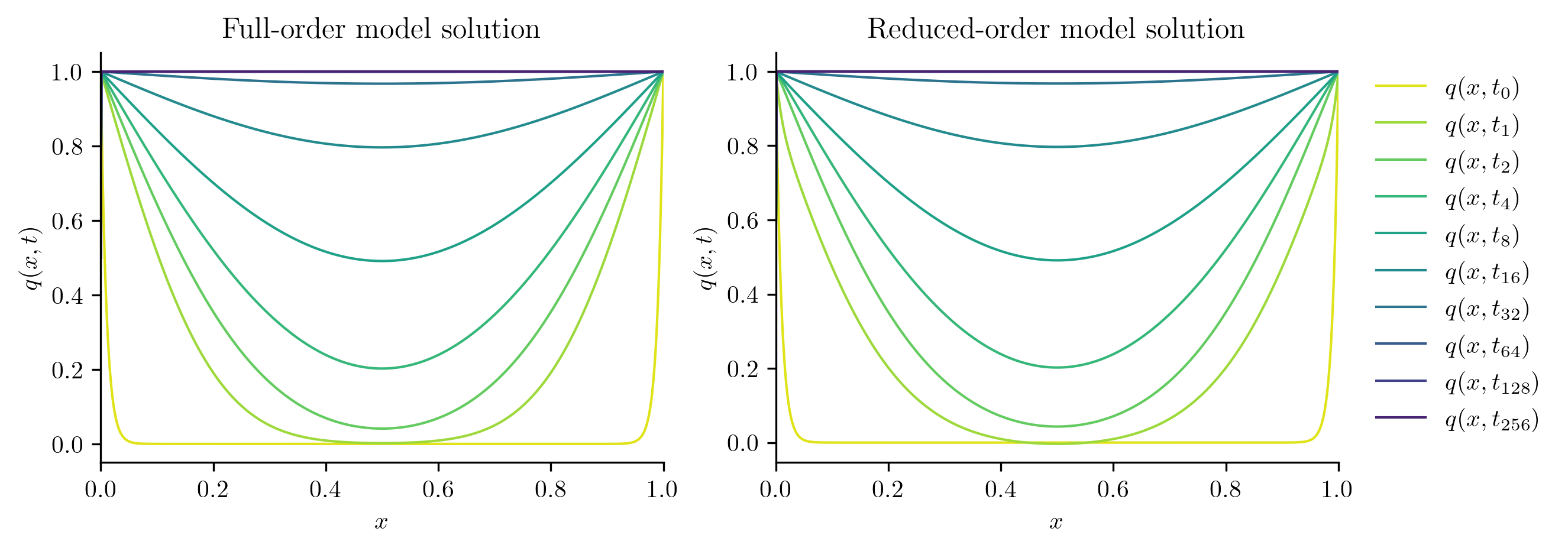

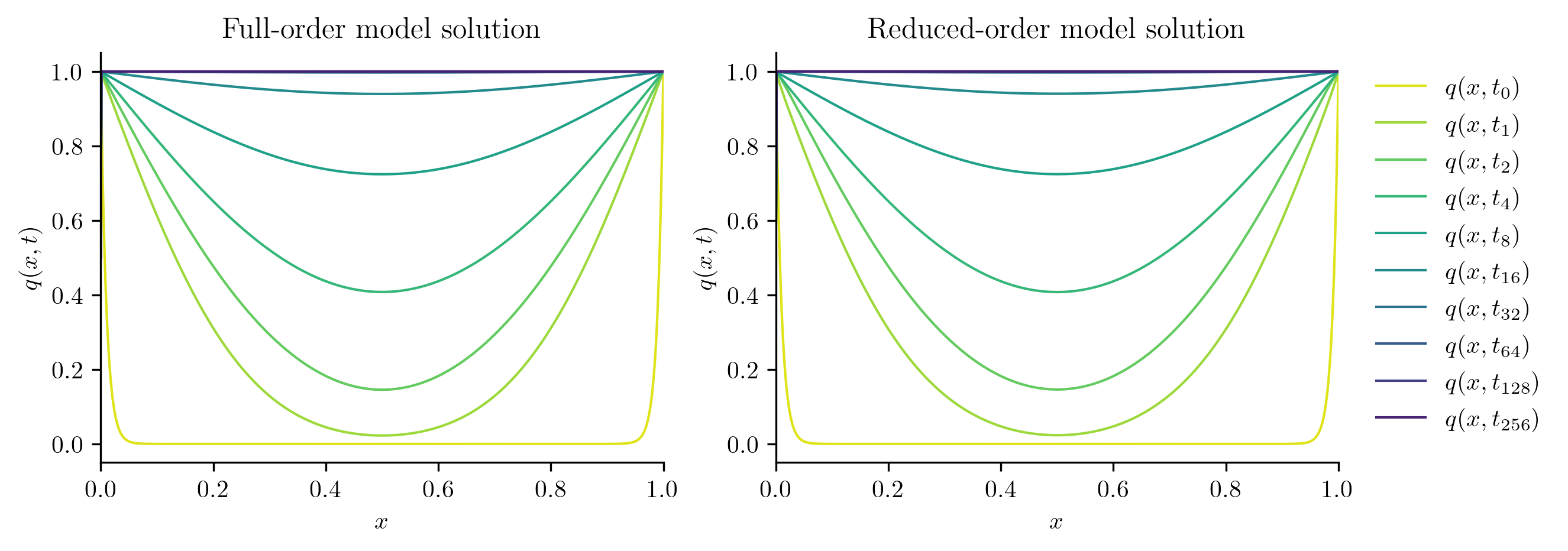

Next, we solve the FOM and ROM at new parameter values not included in the training set.

test_parameters = np.sqrt(training_parameters[:-1] * training_parameters[1:])

print(test_parameters)

[0.12915497 0.21544347 0.35938137 0.59948425 1. 1.66810054

2.7825594 4.64158883 7.74263683]

errors = []

for mu in test_parameters:

with opinf.utils.TimedBlock("Full-order solve"):

Q_FOM = full_order_solve(mu, t_all)

with opinf.utils.TimedBlock("Reduced-order solve"):

Q_ROM = rom.predict(mu, q0, t_all, method="BDF")

plot_two_datasets(

Q_FOM,

"Full-order model solution",

Q_ROM,

"Reduced-order model solution",

)

plt.show()

errors.append(opinf.post.frobenius_error(Q_FOM, Q_ROM)[1])

Full-order solve...done in 0.34 s.

Reduced-order solve...done in 0.01 s.

Full-order solve...done in 0.36 s.

Reduced-order solve...done in 0.01 s.

Full-order solve...done in 0.35 s.

Reduced-order solve...done in 0.01 s.

Full-order solve...done in 0.38 s.

Reduced-order solve...done in 0.01 s.

Full-order solve...done in 0.39 s.

Reduced-order solve...done in 0.01 s.

Full-order solve...done in 0.41 s.

Reduced-order solve...done in 0.01 s.

Full-order solve...done in 0.42 s.

Reduced-order solve...done in 0.01 s.

Full-order solve...done in 0.45 s.

Reduced-order solve...done in 0.01 s.

Full-order solve...done in 0.45 s.

Reduced-order solve...done in 0.01 s.

for mu, err in zip(test_parameters, errors):

print(f"Test parameter mu = {mu:.6f}: error = {err:.4%}")

Test parameter mu = 0.129155: error = 4.2571%

Test parameter mu = 0.215443: error = 2.7810%

Test parameter mu = 0.359381: error = 1.8725%

Test parameter mu = 0.599484: error = 1.3092%

Test parameter mu = 1.000000: error = 0.9282%

Test parameter mu = 1.668101: error = 0.6361%

Test parameter mu = 2.782559: error = 0.3880%

Test parameter mu = 4.641589: error = 0.1760%

Test parameter mu = 7.742637: error = 0.0350%

Stay Tuned

More examples are forthcoming.