External Inputs#

The fundamental goal of model reduction is to efficiently make physics-based predictions. Given synthetic or experimental data that was generated or collected under a certain set of conditions, we aim to construct a cost-effective model that produces accurate solutions under new sets of conditions. The first tutorial showed an example of evaluating a reduced-order model (ROM) for various initial conditions. This tutorial focuses on problems with external time-dependent inputs.

Problem Statement#

We consider a problem with external inputs that are parameterized by a scalar-valued function \(u:\RR\to\RR.\)

Governing Equations

Let \(\Omega = [0,L]\subset \mathbb{R}\) be the spatial domain indicated by the variable \(x\), and let \([0,T]\subset\mathbb{R}\) be the time domain with variable \(t\). We consider the one-dimensional heat equation with time-dependent Dirichlet boundary conditions,

where \(\alpha>0\) is constant and \(q(x,t)\) is the unknown state variable. This is a model for a one-dimensional rod conducting heat with a fixed initial heat profile. The temperature at the ends of the rod are governed by the input function \(u(t)\), but heat is allowed to diffuse through the rod and flow out at the ends of the domain.

Objective

Construct a reduced-order model (ROM) which can be solved rapidly to produce approximate solutions \(q(x, t)\) to the partial differential equation given above for various choices of the input function \(u(t)\). In addition, we will only observe data over a limited time interval \(t \in [0, T']\) with \(T' < T\), then use the ROM to predict the solution for the entire time domain \([0, T]\). Hence, the ROM will be predictive in time and predictive in the inputs.

import numpy as np

import scipy.sparse

import matplotlib.pyplot as plt

import opinf

opinf.utils.mpl_config()

Single Training Trajectory#

In this section a ROM is trained using data collected for a single choice of the input function \(u(t).\)

Full-order Model Definition#

As in the last tutorial, we use a centered finite difference approximation for the spatial derivative to arrive at a system of \(n\) ordinary differential equations. This time, due to the nonzero boundary conditions, the system takes the form

where \(\q:\RR\to\RR^n\), \(\A\in\RR^{n\times n}\), and \(\B\in\RR^{n}\). The system (8) is the full-order model (FOM), which we will use to generate training data for the time domain \([0, T'] \subset [0, T]\).

Discretization details

We take an equidistant grid \(\{x_i\}_{i=0}^{n+1} \subset \Omega\),

The boundary conditions prescribe \(q(x_0,t) = q(x_{n+1},t) = u(t)\). Our goal is to compute \(q(x,t)\) at the interior spatial points \(x_{1},x_{2},\ldots,x_{n}\) for various \(t\in[0,T]\), so we consider the state vector \(\q(t) = [~q(x_{1}, t)~~\cdots~~q(x_{n}, t)~]\trp\in\RR^n\) and derive a system governing the evolution of \(\q(t)\) in time.

Approximating the spatial derivative with a central finite difference approximation,

and using the boundary conditions \(q(0,t) = q(L,t) = u(t)\), we arrive at the following matrices for the FOM.

Training Data Generation#

Let \(L = 1\), \(T = 1\), and set \(\alpha = 100\). We begin by solving the FOM described above, recording the solution every \(\delta t = 10^{-3}\) time units for a single choice of the input function \(u(t)\), yielding \(10^3 + 1 = 1001\) total time steps (1000 steps past the initial condition). We will assume that we can only observe the first \(k = 200\) time steps and use the ROM to predict the remaining \(801\) steps. Our training input function is

def training_input(tt):

return np.ones_like(tt) + np.sin(4 * np.pi * tt) / 4

Full-order solve...done in 0.40 s.

Spatial domain: x.shape=(1023,)

Spatial step size: dx=0.0009765625

Full time domain: t_all.shape=(1001,)

Training time domain: t.shape=(200,)

Temporal step size: dt=0.001000

Full-order matrix A: A.shape=(1023, 1023)

Full-order vector B: B.shape=(1023,)

Initial condition: q0.shape=(1023,)

All FOM solutions: Q_all.shape=(1023, 1001)

Training snapshots: Q.shape=(1023, 200)

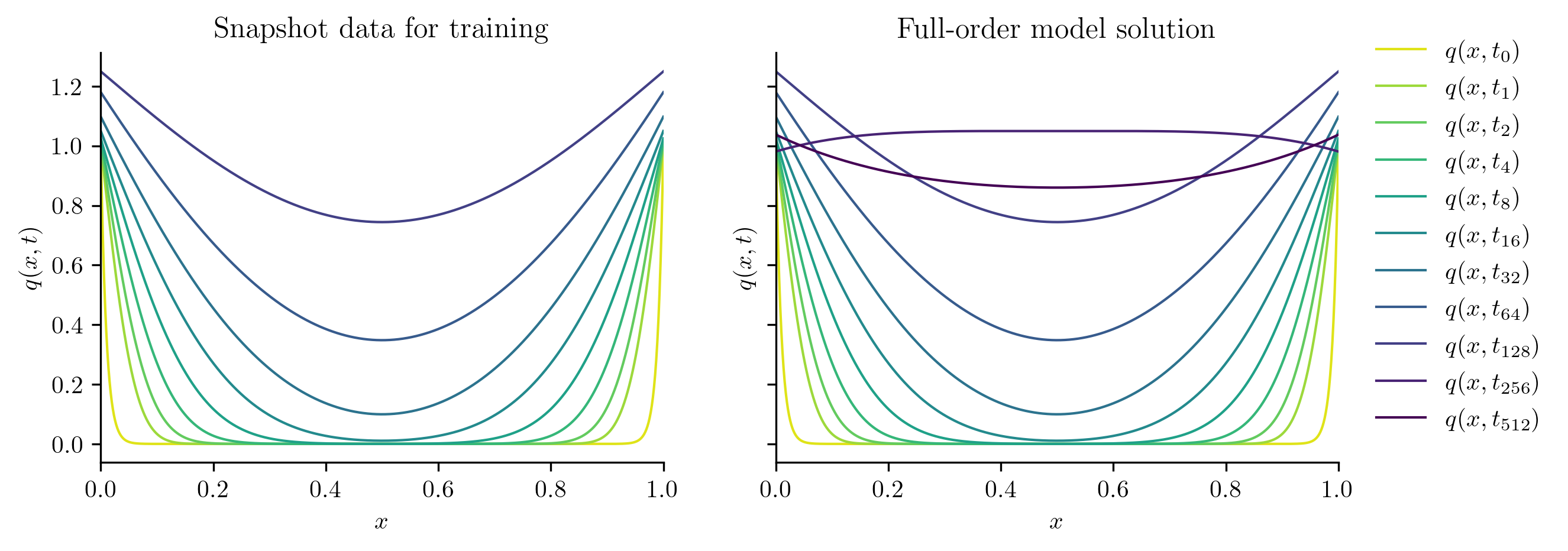

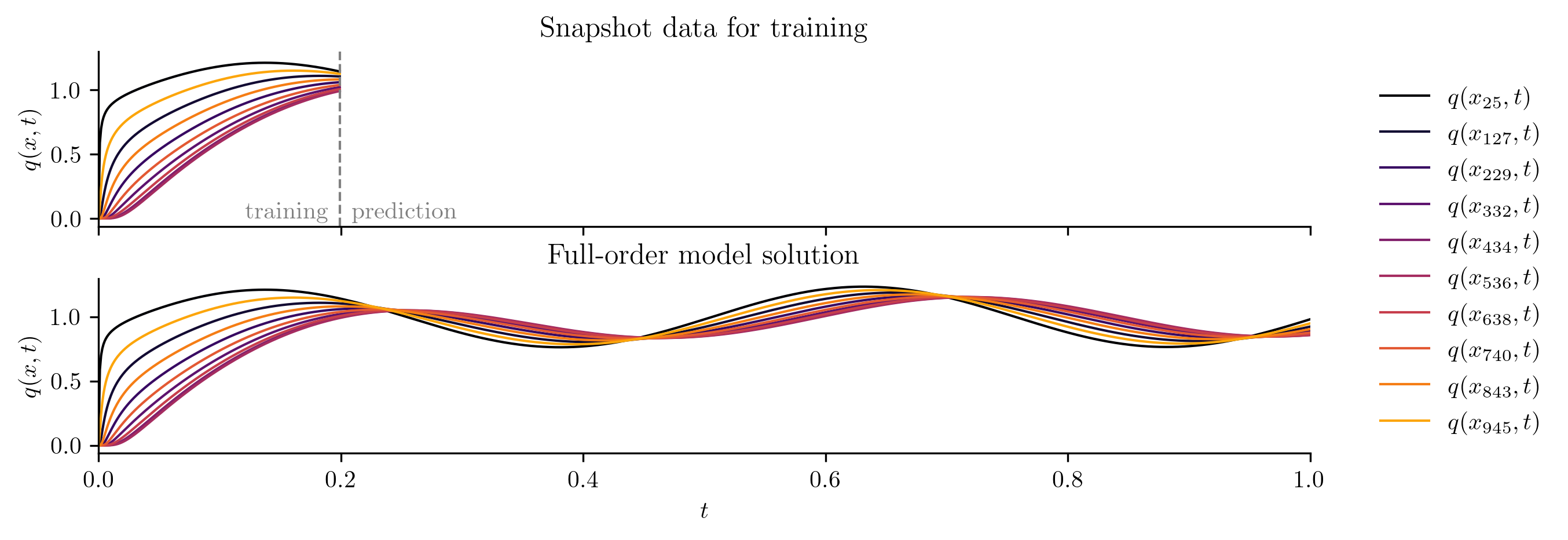

The following code visualizes the training data and the full FOM solution set by plotting a few snapshots over the spatial domain and the time evolution of the snapshots at a few spatial locations.

plot_two_datasets(

Q,

Q_all,

training_input,

"Snapshot data for training",

"Full-order model solution",

cutoff=t[-1],

)

ROM Construction#

We now have snapshot data \(\Q \in \RR^{n \times k}\), but to learn a model with external inputs, we need training data for the inputs as well as for the snapshots. Define the vector

which collects the values of the training input function at the same times as the training snapshots.

U = training_input(t)

print(f"Training snapshots:\t{Q.shape=}")

print(f"Training inputs:\t{U.shape=}")

Training snapshots: Q.shape=(1023, 200)

Training inputs: U.shape=(200,)

We will use a opinf.basis.PODBasis to reduce the dimension of the snapshot training data, which approximates the discretized state vector as \(\q(t) \approx \Vr\qhat(t)\) for some \(\Vr\in\RR^{n\times r}\) with orthonormal columns and \(\qhat(t)\in\RR^{r}\), with and \(r\ll n\).

Input training data are not typically compressed with dimensionality reduction or subjected to other pre-processing routines.

Because the FOM (8) has the linear-time invariant form \(\ddt\q(t) = \A\q(t) + \B u(t)\), we seek a ROM with the same structure, i.e.,

Data for the time derivative \(\ddt\qhat(t)\) are estimated in this example with sixth-order finite differences using opinf.ddt.UniformFiniteDifferencer.

The underlying least-squares problem to determine \(\Ahat\) and \(\Bhat\) is given by

where \(\qhat_j = \qhat(t_j)\in\RR^{r}\) and \(u_j = u(t_j)\in\RR\) are the state snapshots and input data, respectively, and \(\dot{\qhat}_j \approx \ddt\qhat(t)|_{t=t_j}\in\RR^{r}\) are the estimated time derivatives.

Why Use the Same Structure?

An OpInf ROM should have the same structure as an intrusive Galerkin ROM. The Galerkin ROM for (8) is derived by substituting in the approximation \(\q(t)\approx\Vr\qhat(t)\), yielding

Next, left multiply by \(\Vr\trp\) and use the fact that \(\Vr\trp\Vr = \I\) to get the following:

where \(\tilde{\A} = \Vr\trp\A\Vr \in \RR^{r\times r}\) and \(\tilde{\B} = \Vr\trp\B\in\RR^{r}\). Note that this ROM has the same input function \(u(t)\) as the FOM.

Training input data are passed to opinf.roms.ROM.fit() as the inputs argument.

rom = opinf.ROM(

basis=opinf.basis.PODBasis(residual_energy=1e-6),

ddt_estimator=opinf.ddt.UniformFiniteDifferencer(t, "ord6"),

model=opinf.models.ContinuousModel("AB"),

)

with opinf.utils.TimedBlock("Fitting OpInf ROM"):

rom.fit(Q, inputs=U)

with opinf.utils.TimedBlock("Reduced-order solve"):

Q_ROM = rom.predict(q0, t_all, input_func=training_input, method="BDF")

Fitting OpInf ROM...done in 0.01 s.

Reduced-order solve...done in 0.01 s.

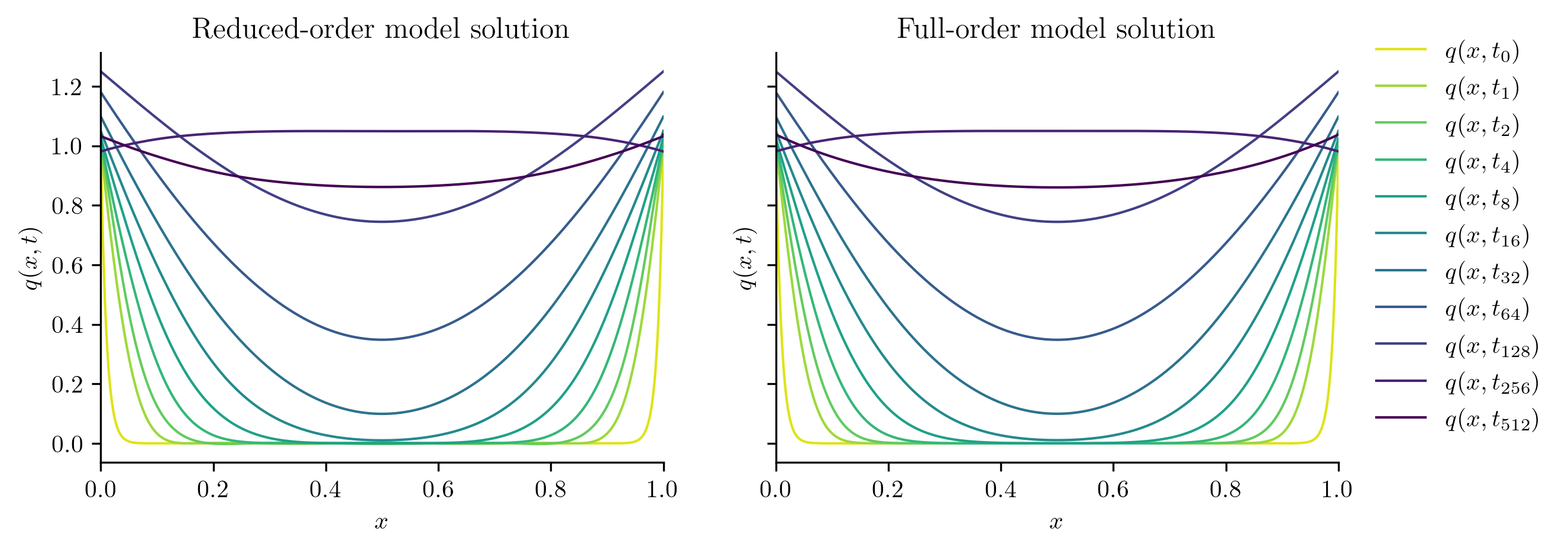

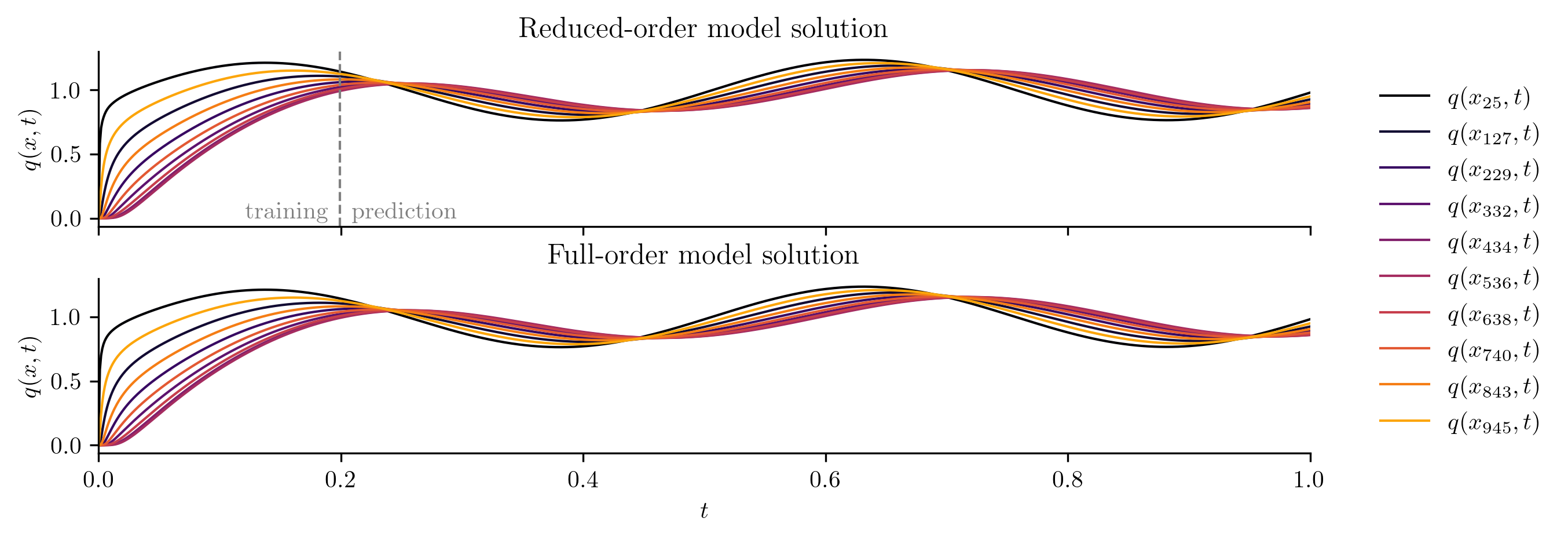

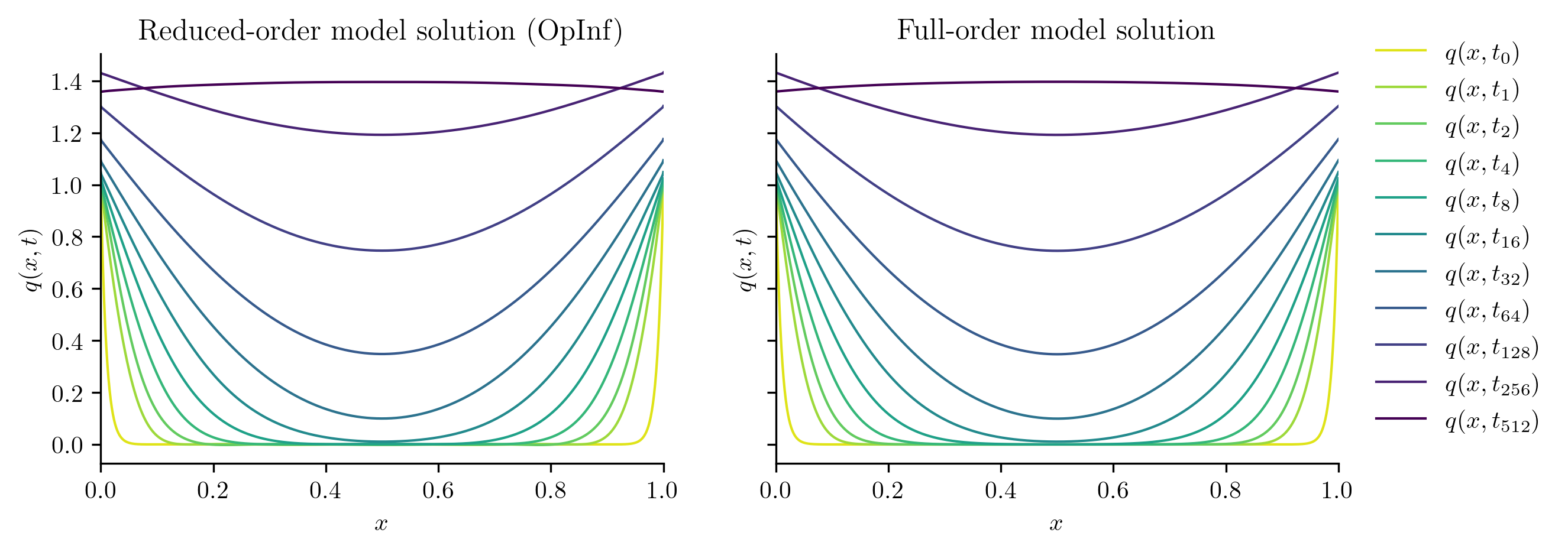

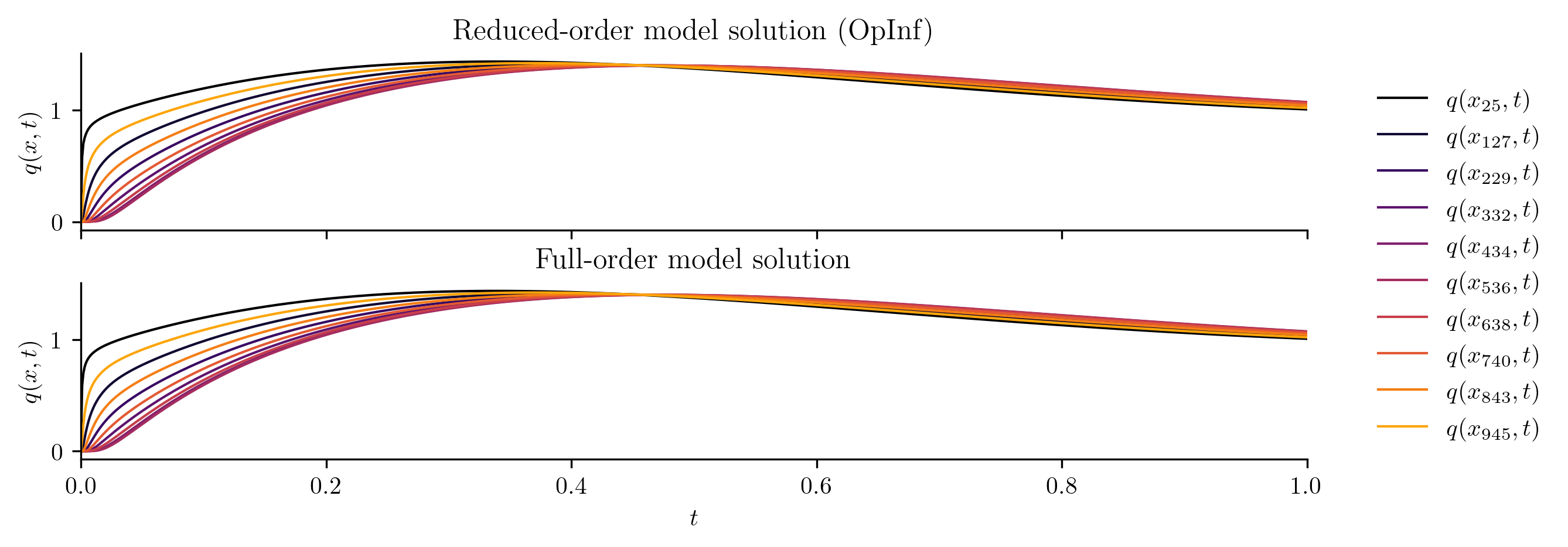

plot_two_datasets(

Q_ROM,

Q_all,

training_input,

"Reduced-order model solution",

"Full-order model solution",

cutoff=t[-1],

)

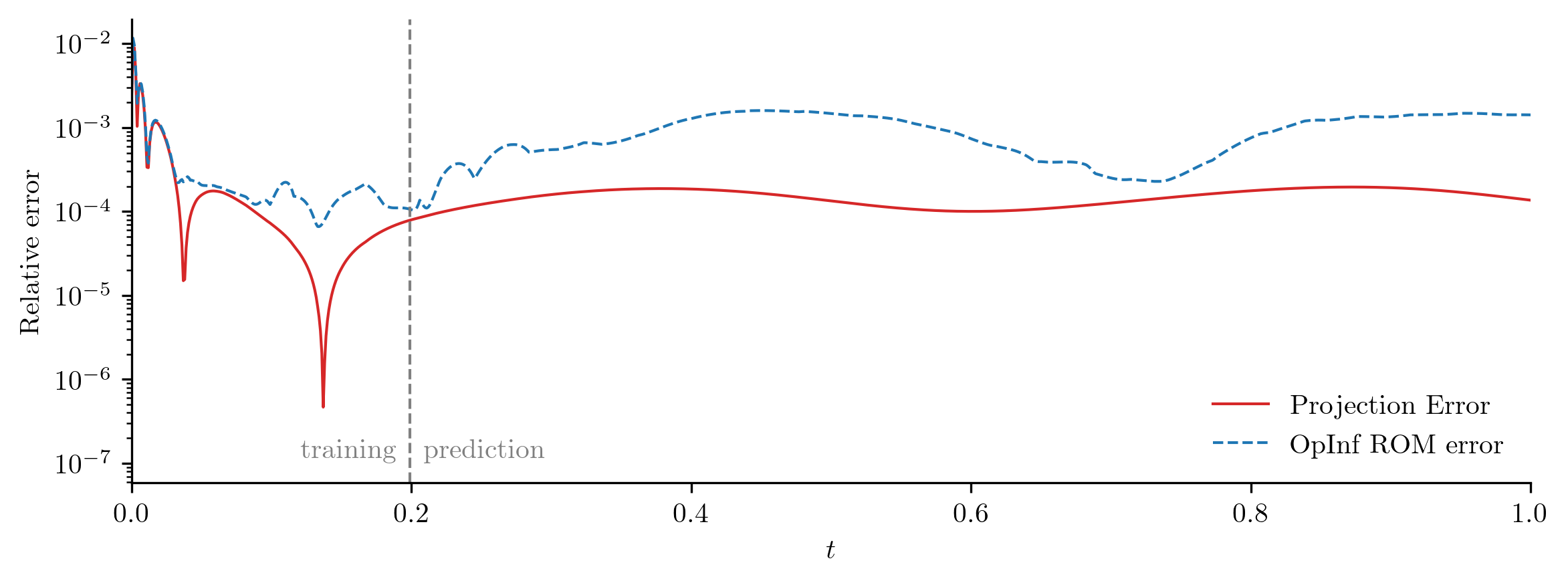

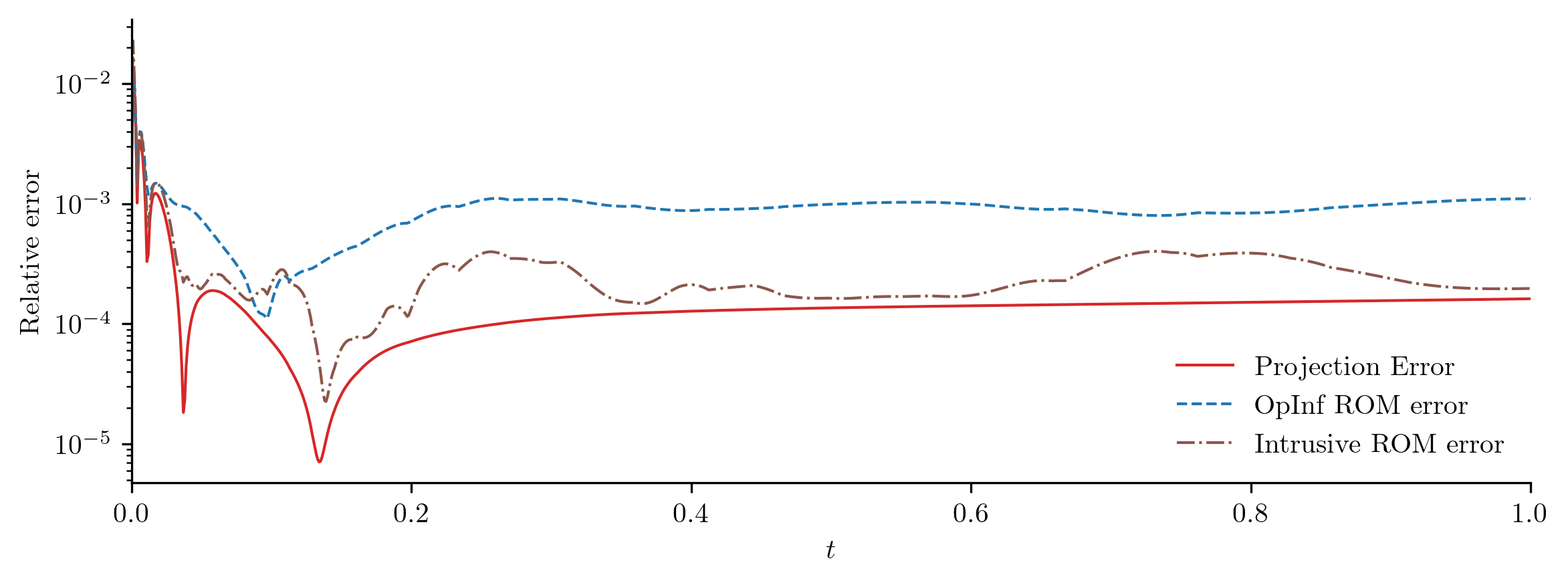

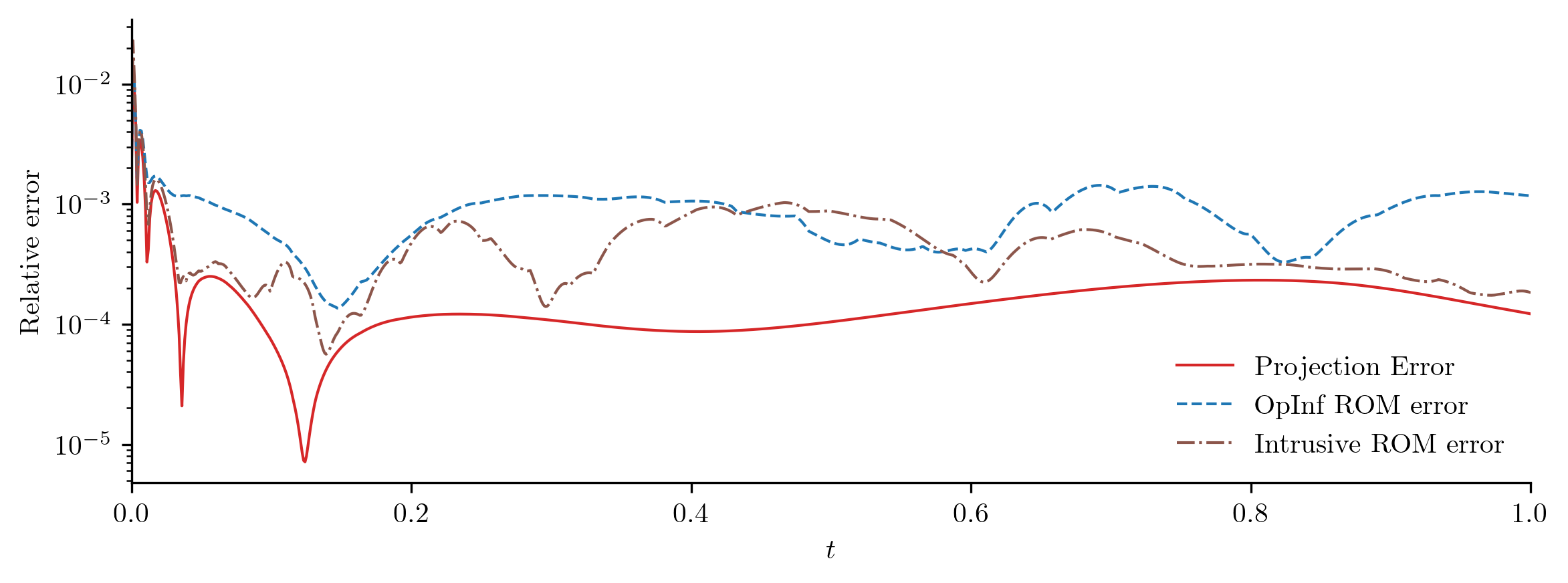

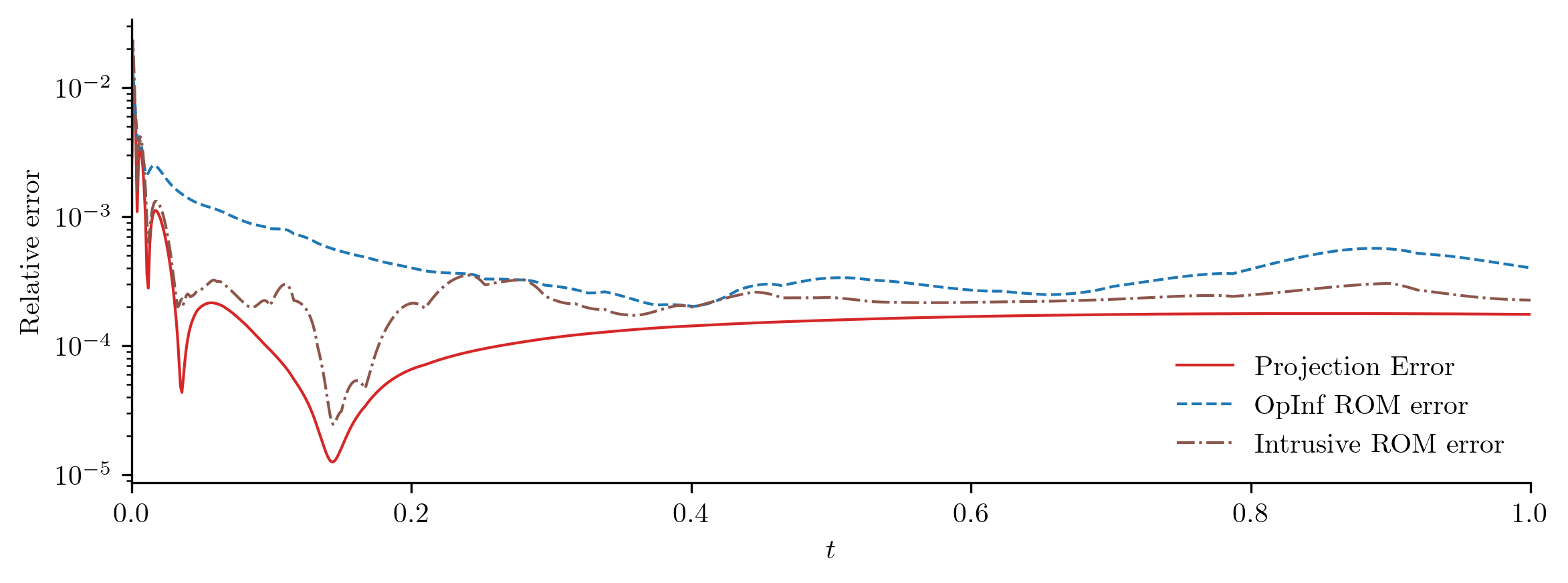

For a closer look at the difference between the FOM and ROM solutions, we compute the relative \(\ell_2\)-norm error of the ROM solution as a function of time using opinf.post.lp_error() and the relative Forbenius-norm error using opinf.post.frobenius_error().

plot_errors_over_time(Q_all, rom.basis, Q_ROM, "OpInf ROM error", cutoff=t[-1])

error_opinf = opinf.post.frobenius_error(Q_all, Q_ROM)[1]

print(f"OpInf ROM error:\t{error_opinf:.4e}")

OpInf ROM error: 8.8303e-04

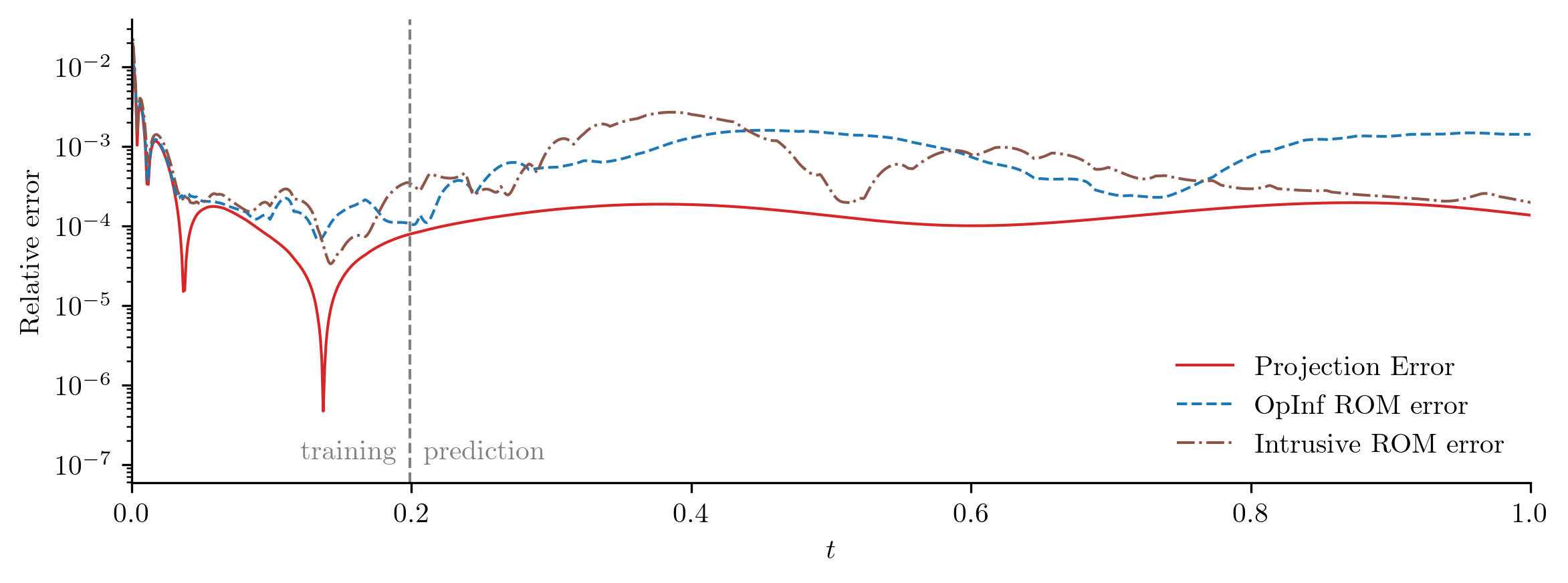

Comparison to the Intrusive Galerkin ROM#

The classical intrusive Galerkin ROM for this problem is given by

where \(\tilde{\A} = \Vr\trp\A\Vr \in \RR^{r\times r}\) and \(\tilde{\B} = \Vr\trp\B\in\RR^{r}\). Here, we form this ROM explicitly (using the same basis matrix \(\Vr\) as before) and compare it to our existing OpInf ROM.

rom_intrusive = opinf.ROM(

basis=rom.basis,

model=fom.galerkin(rom.basis.entries), # Explicitly project FOM operators.

)

with opinf.utils.TimedBlock("Reduced-order model solve (intrusive)"):

Q_ROM_intrusive = rom_intrusive.predict(

q0, t_all, input_func=training_input, method="BDF"

)

Reduced-order model solve (intrusive)...done in 0.01 s.

plot_errors_over_time(

Q_all,

rom.basis,

Q_ROM,

"OpInf ROM error",

Q_ROM_intrusive,

"Intrusive ROM error",

cutoff=t[-1],

)

error_intrusive = opinf.post.frobenius_error(Q_all, Q_ROM_intrusive)[1]

error_projection = rom.basis.projection_error(Q_all, relative=True)

print(

"Relative Frobenius-norm errors",

"-" * 33,

f"Projection error:\t{error_projection:%}",

f"OpInf ROM error:\t{error_opinf:%}",

f"Intrusive ROM error:\t{error_intrusive:%}",

sep="\n",

)

Relative Frobenius-norm errors

---------------------------------

Projection error: 0.019187%

OpInf ROM error: 0.088303%

Intrusive ROM error: 0.089150%

In this experiment, the OpInf ROM and the corresponding intrusive ROM have comparable error, even though the OpInf ROM is calibrated without intrusive access to the FOM.

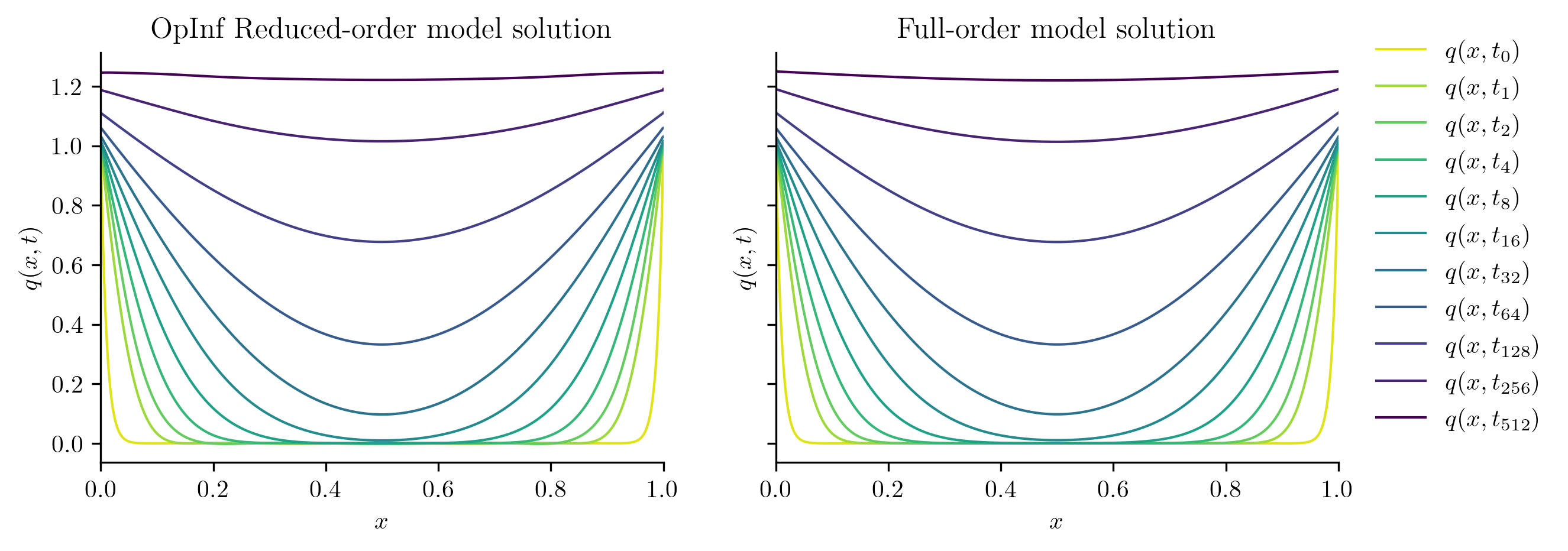

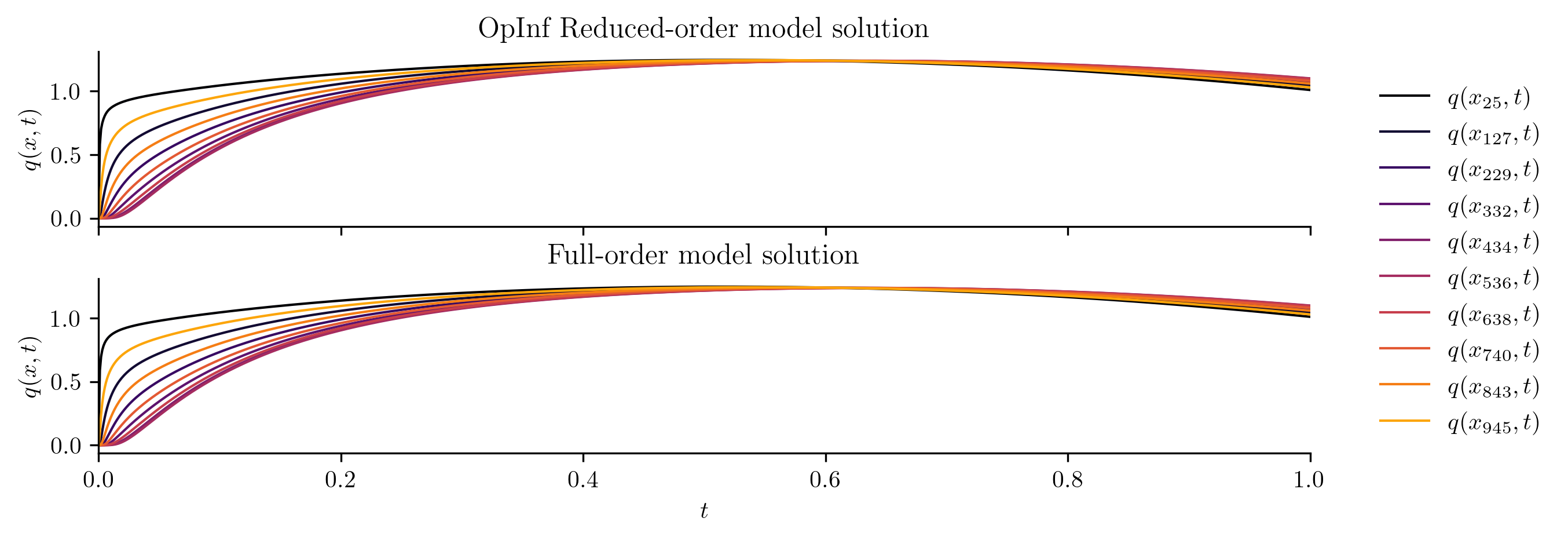

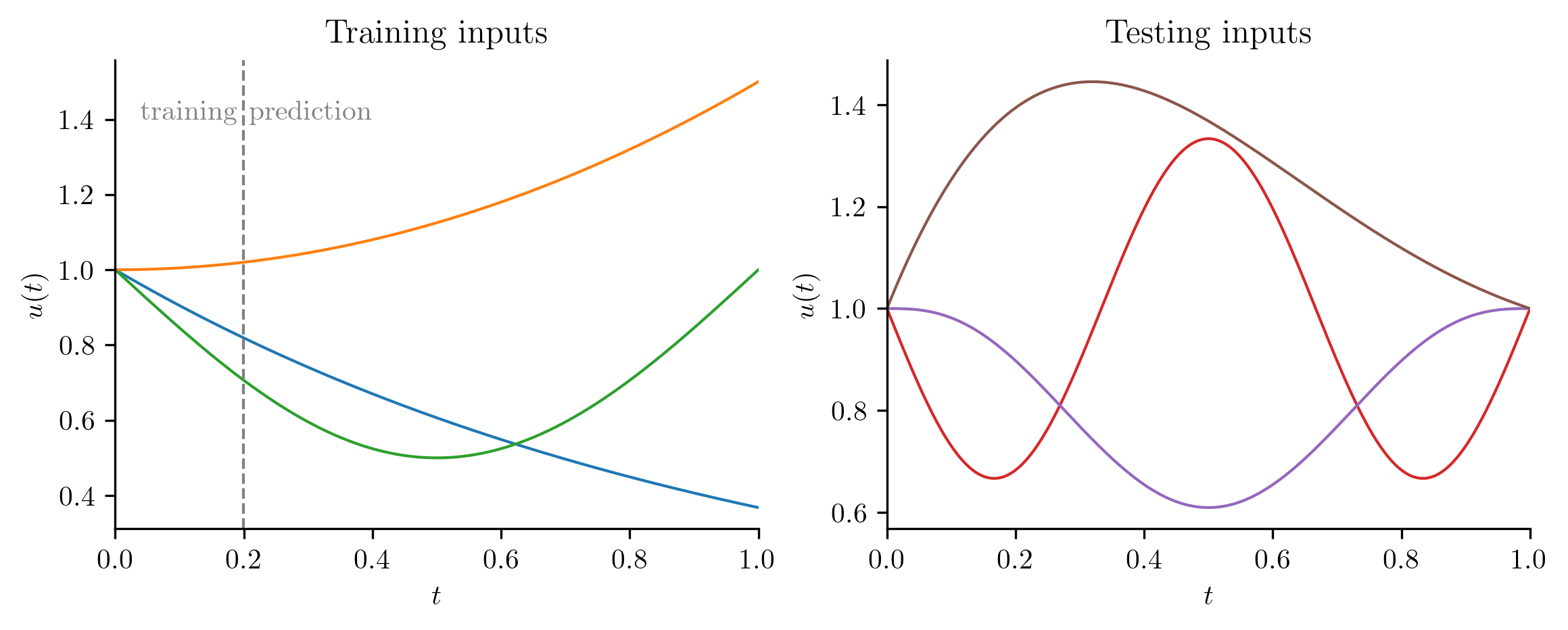

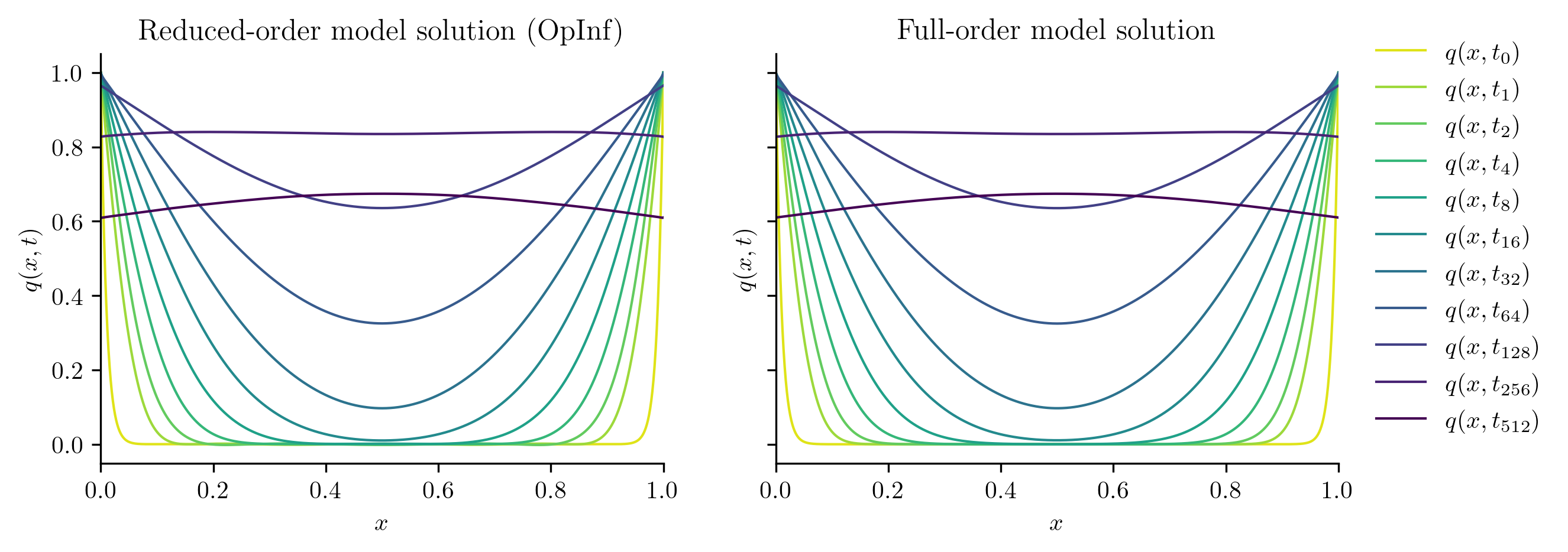

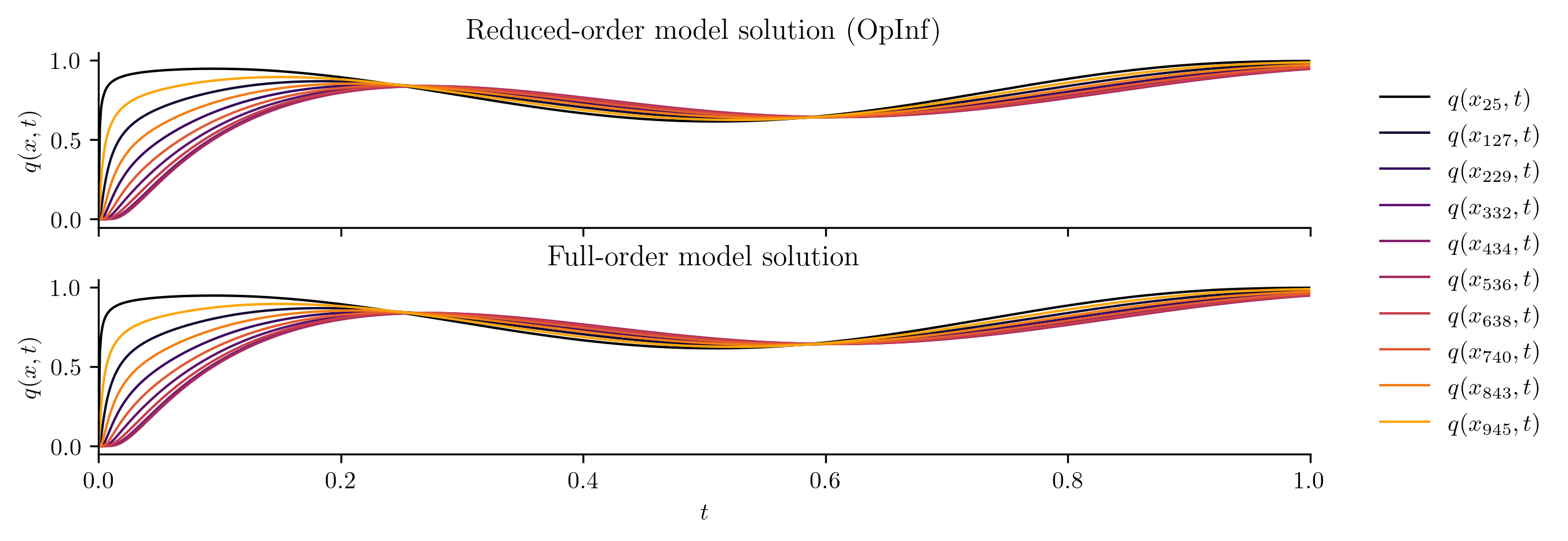

Generalization to New Inputs#

The previous experiment uses a single choice of \(u(t)\) for the training and for the prediction in time. Now, we define a new choice of input function \(u(t)\),

and evaluate the FOM and ROM for this new input.

def test_input(t):

return 1 + t * (1 - t)

with opinf.utils.TimedBlock("Full-order solve"):

Qtest_FOM = full_order_solve(t_all, test_input)

with opinf.utils.TimedBlock("Reduced-order solve (OpInf)"):

Qtest_ROM = rom.predict(q0, t_all, test_input, method="BDF")

with opinf.utils.TimedBlock("Reduced-order solve (intrusive)"):

Qtest_ROM_intrusive = rom_intrusive.predict(

q0, t_all, test_input, method="BDF"

)

Full-order solve...done in 0.38 s.

Reduced-order solve (OpInf)...done in 0.01 s.

Reduced-order solve (intrusive)...done in 0.01 s.

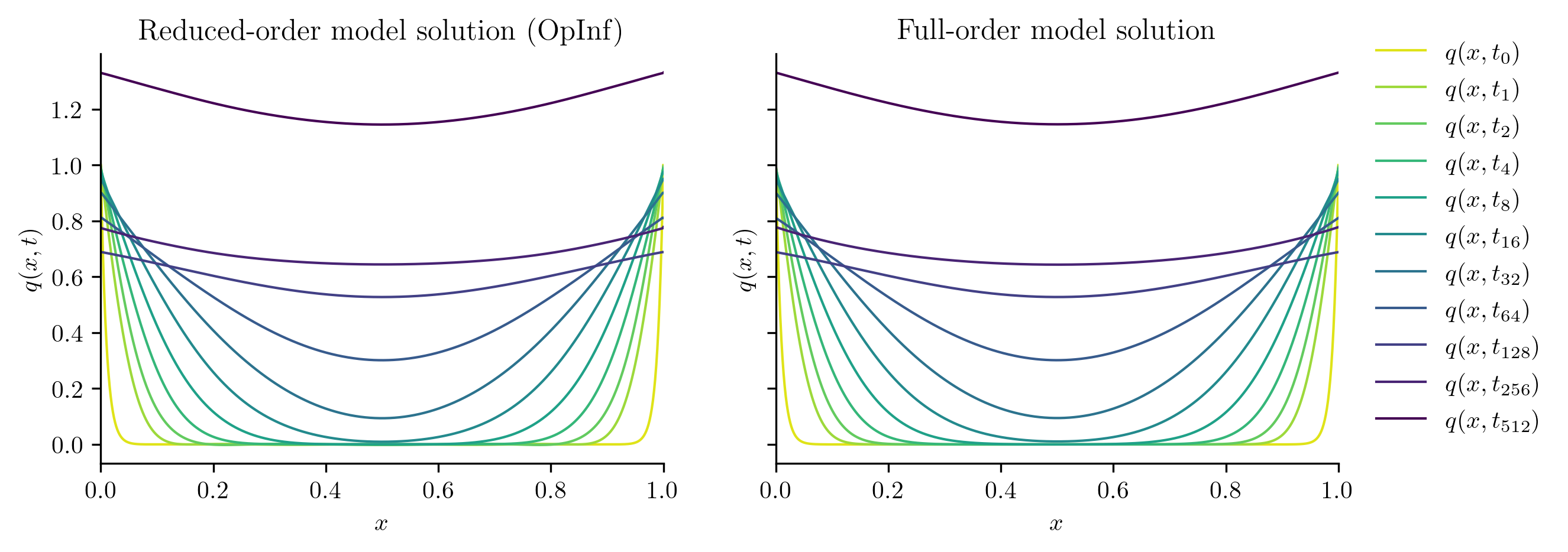

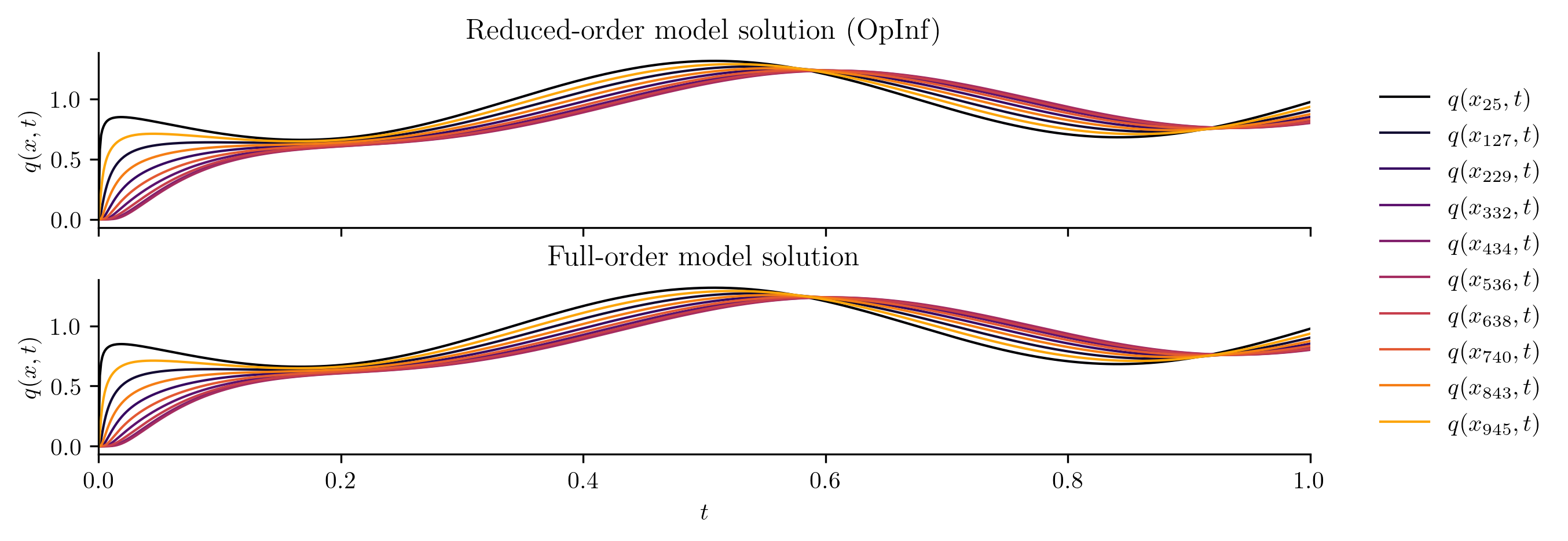

plot_two_datasets(

Qtest_ROM,

Qtest_FOM,

test_input,

"OpInf Reduced-order model solution",

"Full-order model solution",

)

plot_errors_over_time(

Qtest_FOM,

rom.basis,

Qtest_ROM,

"OpInf ROM error",

Qtest_ROM_intrusive,

"Intrusive ROM error",

)

Both ROMs perform well with a new input function, but the intrusive ROM performs slightly better than the OpInf ROM. This is typical; intrusive ROMs are often more robust and generalizable than standard OpInf ROMs, but OpInf ROMs tend to reproduce training data better than intrusive ROMs.

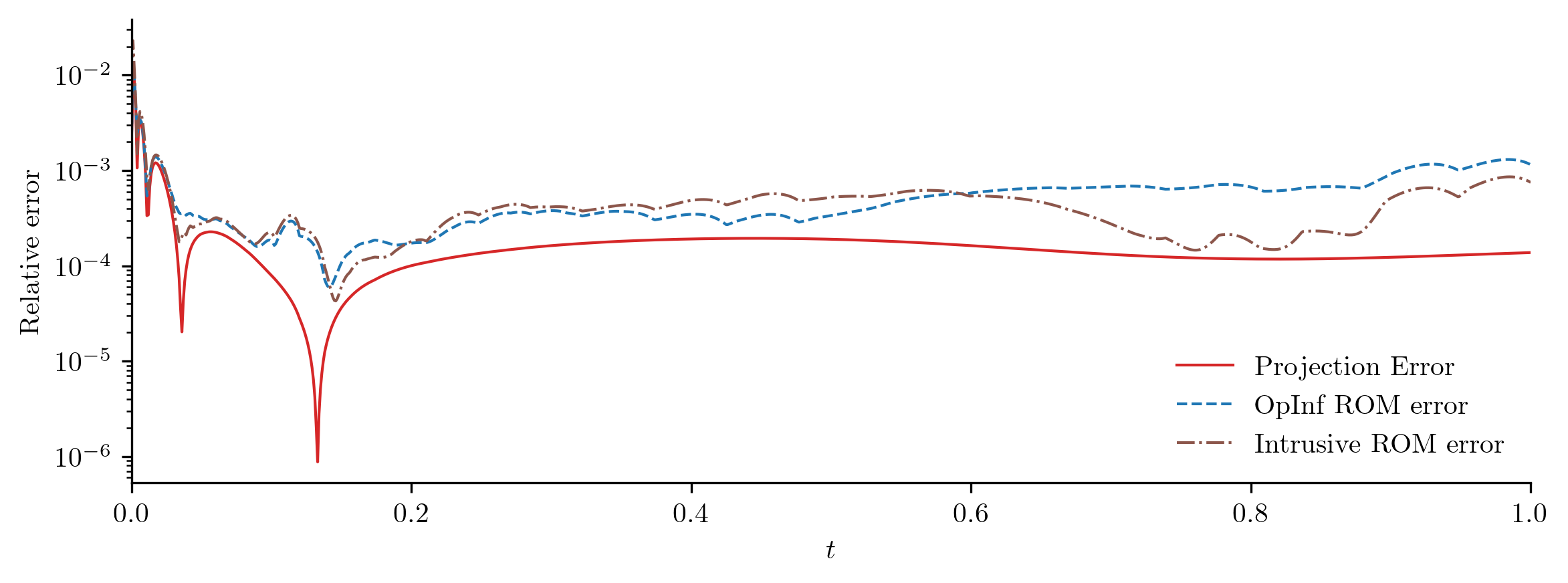

Multiple Training Trajectories#

If data corresponding to several choices of the input function \(u(t)\) are available for training, we collect a list of snapshot matrices and a list of corresponding inputs to pass to fit().

Training Data Generation#

Below, we solve the PDE using the three input functions for training data:

The following input functions are used for testing.

training_inputs = [

lambda t: np.exp(-t),

lambda t: 1 + t**2 / 2,

lambda t: 1 - np.sin(np.pi * t) / 2,

]

testing_inputs = [

lambda t: 1 - np.sin(3 * np.pi * t) / 3,

lambda t: 1 + 25 * (t * (t - 1)) ** 3,

lambda t: 1 + np.exp(-2 * t) * np.sin(np.pi * t),

]

# Solve the full-order model for each training input and collect results.

Qs = [] # State snapshots.

Us = [] # Corresponding inputs.

for u in training_inputs:

Qs.append(full_order_solve(t, u))

Us.append(u(t))

rom = opinf.ROM(

basis=opinf.basis.PODBasis(residual_energy=1e-6),

ddt_estimator=opinf.ddt.UniformFiniteDifferencer(t, "ord6"),

model=opinf.models.ContinuousModel("AB"),

)

with opinf.utils.TimedBlock("Fitting OpInf ROM"):

rom.fit(Qs, inputs=Us)

rom_intrusive = opinf.ROM(

basis=rom.basis,

model=fom.galerkin(rom.basis.entries),

)

Fitting OpInf ROM...done in 0.03 s.

for i, u in enumerate(testing_inputs):

print(f"Test input function {i+1:d}")

with opinf.utils.TimedBlock("Full-order solve"):

Q_FOM = full_order_solve(t_all, u)

with opinf.utils.TimedBlock("Reduced-order solve (OpInf)"):

Q_ROM = rom.predict(q0, t_all, u, method="BDF")

with opinf.utils.TimedBlock("Reduced-order solve (intrusive)"):

Q_ROM_intrusive = rom_intrusive.predict(q0, t_all, u, method="BDF")

plot_two_datasets(

Q_ROM,

Q_FOM,

u,

"Reduced-order model solution (OpInf)",

"Full-order model solution",

)

plot_errors_over_time(

Q_FOM,

rom.basis,

Q_ROM,

"OpInf ROM error",

Q_ROM_intrusive,

"Intrusive ROM error",

)

Test input function 1

Full-order solve...done in 0.40 s.

Reduced-order solve (OpInf)...done in 0.01 s.

Reduced-order solve (intrusive)...done in 0.01 s.

Test input function 2

Full-order solve...done in 0.40 s.

Reduced-order solve (OpInf)...done in 0.01 s.

Reduced-order solve (intrusive)...done in 0.01 s.

Test input function 3

Full-order solve...done in 0.38 s.

Reduced-order solve (OpInf)...done in 0.01 s.

Reduced-order solve (intrusive)...done in 0.01 s.

Multi-dimensional Inputs

The examples in this tutorial use a scalar-valued input function \(u:\RR\to\RR\). For models with vector inputs \(\u:\RR\to\RR^m\) with \(m > 1\), training inputs are collected into a matrix with \(m\) rows:

This is the matrix used for the inputs argument of fit().