Getting Started#

The opinf package constructs reduced-order models for large dynamical systems.

Such systems often arise from the numerical solution of partial differentials equations.

In this introductory tutorial, we use operator inference (OpInf) to learn a reduced-order model for a simple heat equation.

This is a simplified version of the first numerical example in [PW16].

Problem Statement#

Governing Equations

For the spatial domain \(\Omega = [0,L]\subset \RR\) and the time domain \([t_0,t_f]\subset\RR\), consider the one-dimensional heat equation with homogeneous Dirichlet boundary conditions:

This is a model for a one-dimensional rod that conducts heat. The unknown state variable \(q(x,t)\) represents the temperature of the rod at location \(x\) and time \(t\); the temperature at the ends of the rod are fixed at \(0\) and heat is allowed to flow out of the rod at the ends.

Objective

Construct a low-dimensional system of ordinary differential equations, called the reduced-order model (ROM), which can be solved rapidly to produce approximate solutions \(q(x, t)\) to the partial differential equation given above. We will use OpInf to learn the ROM from high-fidelity data for one choice of initial condition \(q_0(x)\) and test its performance on new initial conditions.

We will make use of numpy, scipy, and matplotlib from the standard Python scientific stack, which are all automatically installed when opinf is installed.

The pandas library is also used later to consolidate and report results.

import numpy as np

import pandas as pd

import scipy.sparse

import scipy.integrate

import scipy.linalg as la

import matplotlib.pyplot as plt

import opinf

opinf.utils.mpl_config()

Training Data#

We begin by generating training data through a traditional numerical method. A spatial discretization of the governing equations with \(n\) degrees of freedom via finite differences or the finite element method leads to a linear semi-discrete system of \(n\) ordinary differential equations,

where \(\q:\RR\to\RR^n\), \(\A\in\RR^{n\times n}\), and \(\q_0\in\RR^n\). For this tutorial, we use central finite differences to construct this system.

Discretization details

For a given \(n\in\NN\), let \(\{x\}_{i=0}^{n+1}\) be an equidistant grid of \(n+2\) points on \(\Omega\), i.e.,

The boundary conditions prescribe \(q(x_0,t) = q(x_{n+1},t) = 0\). Our goal is to compute \(q(x, t)\) at the interior spatial points \(x_{1}, x_{2}, \ldots, x_{n}\) for various \(t \in [t_0,t_f].\) That is, we wish to compute the state vector

Introducing a central finite difference approximation for the spatial derivative,

yields the semi-discrete linear system

where

The system (5) is called the full-order model (FOM) or the high-fidelity model. The computational complexity of solving (5) depends on the dimension \(n\), which must often be large in order for \(\q(t)\) to approximate \(q(x,t)\) well over the spatial grid. Our goal is to construct a ROM that approximates the FOM, but whose computational complexity only depends on some smaller dimension \(r \ll n\).

No FOM? No Problem.

One key advantage of OpInf is that, because it learns a ROM from data alone, direct access to a FOM is not required. In this tutorial, we explicitly construct a FOM, but in practice, we only need the following:

Solution data to learn from, and

Some knowledge of the structure of the governing equations.

For this demo, we set \(L = 1\), \(t_0 = 0\), \(t_f = 1\), and use \(n = 2^{10} - 1 = 1023\) spatial degrees of freedom. We begin by solving the FOM with the initial condition

and record the solution every \(\delta t = 0.0025\) time units. This results in \(k = 401\) state snapshots (\(400\) time steps after the initial condition), which are organized into the snapshot matrix \(\Q\in\RR^{n\times k}\), where the \(j\)-th column is the solution trajectory at time \(t_j\):

Note that the initial condition \(\q_{0}\) is included as a column in the snapshot matrix.

The following code constructs the spatial and time domains, the FOM matrix \(\A\), the initial condition vector \(\q_0\), and solves the FOM with scipy.integrate.solve_ivp().

Full-order solve...done in 0.29 s.

Spatial domain size: x.shape=(1023,)

Spatial step size: dx=0.0009765625

Time domain size: t.shape=(401,)

Temporal step size: dt=0.002500

Full-order matrix A: A.shape=(1023, 1023)

Initial condition: q0.shape=(1023,)

Training snapshots: Q.shape=(1023, 401)

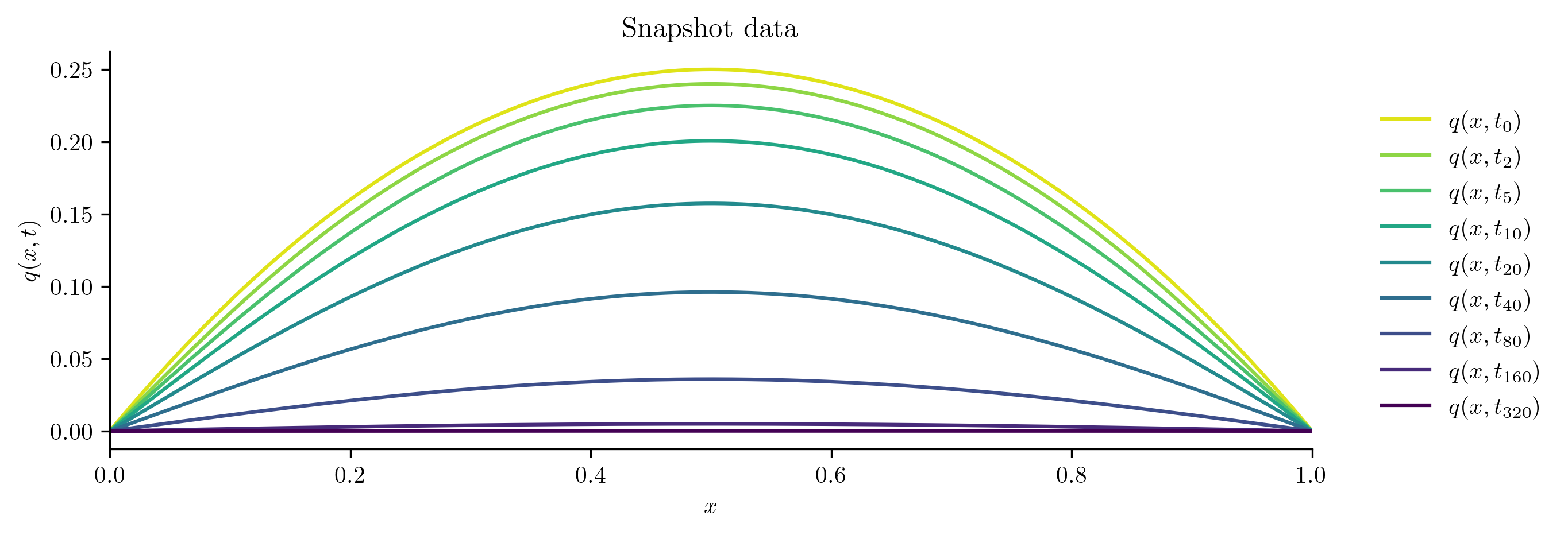

Next, we visualize the snapshots to get a sense of how the solution looks qualitatively.

plot_heat_data(Q, "Snapshot data")

In the figure, earlier times are lighter colors and later times are darker colors. This matches our intuition: initially there is more heat toward the center of the rod, which then diffuses out of the ends of the rod.

Operator Inference#

At this point, we have gathered some training data by simulating the FOM. We also have an initial condition and a time domain.

Name |

Symbol |

Code Variable |

|---|---|---|

State snapshots |

\(\Q\) |

|

Initial state |

\(\q_0\) |

|

Time domain |

\([t_0,t_f]\) |

|

Our task now is to construct a low-dimensional system whose solutions can be used as approximate solutions to the PDE. Below we show the overall process, then explain each piece that happens under the hood.

import opinf

# Define the reduced-order model.

rom = opinf.ROM(

basis=opinf.basis.PODBasis(cumulative_energy=0.9999),

ddt_estimator=opinf.ddt.UniformFiniteDifferencer(t, "ord6"),

model=opinf.models.ContinuousModel(

operators="A",

solver=opinf.lstsq.L2Solver(regularizer=1e-8),

),

)

# Calibrate the reduced-order model to data.

rom.fit(Q)

# Solve the reduced-order model.

with opinf.utils.TimedBlock("Reduced-order solve"):

Q_ROM = rom.predict(q0, t, method="BDF", max_step=dt)

# Compute the relative error of the ROM solution.

opinf.post.frobenius_error(Q, Q_ROM)[1]

Reduced-order solve...done in 0.02 s.

np.float64(0.001041558370145602)

Data Compression#

Our first task is to construct a low-dimensional representation of the FOM state \(\q(t)\in\RR^{n}\), denoted \(\qhat(t)\in\RR^r\). A ROM is a system of equations that acts on the reduced state \(\qhat(t)\). The integer \(r\) is the dimension of the ROM: if \(r \ll n\), we can expect to be able to solve the ROM much faster than we can solve the FOM.

The relationship between \(\q(t)\) and \(\qhat(t)\) helps dictate the structure of the ROM and allows us to compress the state snapshots \(\q_0,\ldots,\q_{k-1}\in\RR^{n}\) to low-dimensional representations \(\qhat_0,\ldots,\qhat_{k-1}\in\RR^{r}\) that are used to calibrate the ROM.

Tools for defining low-dimensional approximations of high-dimensional states are defined in opinf.basis.

For this problem, we use a linear approximation for \(\q(t)\):

The matrix \(\Vr\in\RR^{n\times r}\) is called a basis matrix and its columns are called basis vectors. We typically have \(\Vr\trp\Vr = \I\), i.e., the basis vectors form an orthonormal set. Note that the product \(\Vr\qhat(t)\) is a linear combination of the basis vectors, so \(\q(t)\) can only be approximated well if it is within or near the span of the basis vectors.

We choose \(\Vr\) using proper orthogonal decomposition (POD), which is based on the singular value decomposition (SVD) of samples of \(\q(t)\).

The singular values give some guidance on choosing an appropriate ROM dimension \(r\).

Fast singular value decay is a good sign that a ROM may be successful with this kind of data; if the singular values do not decay quickly, then a large \(r\) may be required to capture the behavior of the system.

Below, we initialize a opinf.basis.PODBasis object with the following criteria for selecting \(r\): choose the smallest \(r\) such that we capture over \(99.9999\%\) of the cumulative energy of the system.

# Initialize a basis.

basis = opinf.basis.PODBasis(cumulative_energy=0.9999)

# Fit the basis (compute Vr) using the snapshot data.

basis.fit(Q)

print(basis)

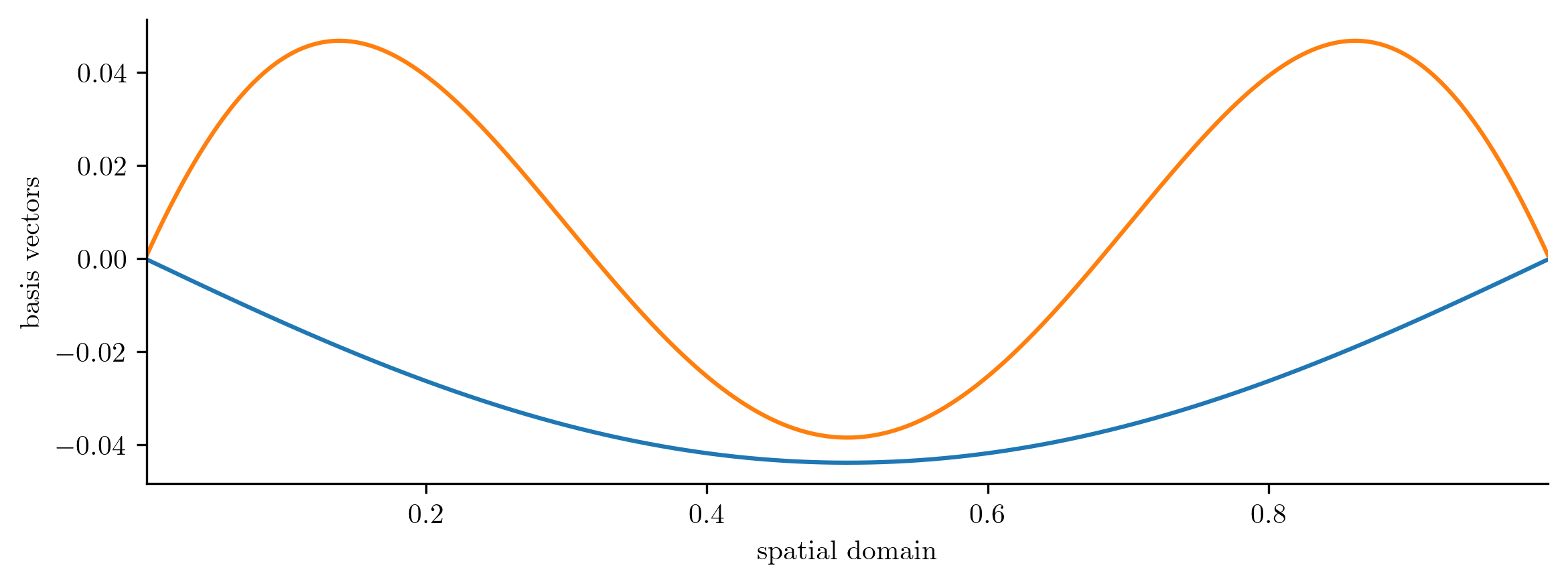

# Visualize the basis vectors.

basis.plot1D(x)

plt.show()

PODBasis

full_state_dimension: 1023

reduced_state_dimension: 2

cumulative energy: 99.999899%

residual energy: 1.0084e-06

401 basis vectors available

SVD solver: scipy.linalg.svd()

Solutions of our eventual ROM are restricted to linear combinations of these two basis vectors.

After the basis is initialized and calibrated, we can use it to compress the state snapshots to an \(r\)-dimensional representation. In this case, we have \(\qhat_j = \Vr\trp\q_j \in \RR^{r}\). These \(\qhat_j\) are data for the ROM state \(\qhat(t)\) at time \(t_j\).

# Compress the state snapshots to the reduced space defined by the basis.

Q_ = basis.compress(Q)

print(f"{Q.shape=}, {Q_.shape=}")

Q.shape=(1023, 401), Q_.shape=(2, 401)

To see how well the state can be represented by a given basis matrix, it is helpful to examine the projection of the state snapshots. For linear state approximations like POD, the projection of \(\q\in\RR^n\) is the vector \(\Vr\Vr\trp\q\in\RR^n\).

basis.projection_error(Q)

np.float64(0.001004178414241919)

Time Derivative Estimation#

In addition to the compressed state snapshots \(\qhat_0,\ldots,\qhat_{k-1}\), OpInf for time-continuous (ODE) models requires data for the time derivatives of the state snapshots, denoted

There are two ways to get such data.

If time derivatives of the original state snapshots are available, they can be compressed to the reduced state space.

Otherwise, the time derivatives may be estimated from the compressed states.

The opinf.ddt module defines tools for estimating time derivatives from state data.

Recall that the FOM in this problem (5) is given by \(\ddt\q(t) = \A\q(t)\).

In this case we have \(\A\), so we can compute \(\dot{\q}_j = \A\q_j\), then set \(\dot{\qhat}_j = \Vr\trp\dot{\q}_j\).

Below, we should how this approach compares with using tools from opinf.ddt.

Since the data \(\q_0,\ldots,\q_{k-1}\) are defined on a uniform time grid, we use opinf.ddt.UniformFiniteDifferencer.

# Compute exact time derivatives using the FOM and compress them.

Qdot_exact = basis.compress(A @ Q)

# Estimate time derivatives using 6th-order finite differences.

ddt_estimator = opinf.ddt.UniformFiniteDifferencer(t, "ord6")

Qdot_ = ddt_estimator.estimate(Q_)[1]

print(f"{Qdot_exact.shape=}\t{Qdot_.shape=}")

Qdot_exact.shape=(2, 401) Qdot_.shape=(2, 401)

# Check that the estimate is close to the true time derivatives.

la.norm(Qdot_exact - Qdot_, ord=np.inf) / la.norm(Qdot_exact, ord=np.inf)

0.0009460414867119675

Specifying the Model Operators#

We now have low-dimensional state and time derivative data. To learn a ROM with OpInf, we must specify the structure of the ROM, which should be motivated by the structure of the FOM and the dimensionality reduction strategy.

The FOM (5) is a linear system of ODEs,

Substituting in the approximation \(\q(t)\approx\Vr\qhat(t)\), we have

Next, left multiply by \(\Vr\trp\) and use the fact that \(\Vr\trp\Vr = \I\) to get the following:

where \(\tilde{\A} = \Vr\trp\A\Vr \in \RR^{r\times r}\). The system (6) is called the intrusive Galerkin ROM corresponding to the FOM and the choice of basis matrix \(\Vr\). The intrusive ROM can only be constructed if \(\A\) is known; with OpInf, we aim to construct a reduced system with the same linear structure as the intrusive ROM, but without using \(\A\) explicitly:

for some \(\Ahat\in\RR^{r\times r}\) inferred from the training data.

We specify this linear structure by initializing an opinf.models.ContinuousModel with the string "A".

model = opinf.models.ContinuousModel("A")

print(model)

ContinuousModel

structure: dq / dt = Aq(t)

state_dimension: None

input_dimension: 0

operators:

LinearOperator

state_dimension: None

entries.shape: None

solver: PlainSolver (not trained)

solver: scipy.linalg.lstsq(cond=None, lapack_driver=None)

Model Constructor Shortcut

The "A" argument in the constructor is a shortcut for a slightly longer statement:

>>> model = opinf.models.ContinuousModel([opinf.operators.LinearOperator()])

The opinf.operators.LinearOperator class represents the \(r \times r\) matrix \(\Ahat\), whose entries will be calibrated via regression.

See opinf.operators for the kinds of terms that OpInf ROMs can contain.

Calibrating Model Operators#

Our task now is to learn the entries of \(\Ahat\) using the compressed state snapshots \(\qhat_0,\ldots,\qhat_{k-1}\) and the corresponding time derivatives \(\dot{\qhat}_0,\ldots,\dot{\qhat}_{k-1}\). OpInf does this through minimizing the residual of the model equation with respect to the data:

where \(\mathcal{R}(\Ahat)\) is a regularization term (more on this later).

The opinf.lstsq module defines tools for solving this problem (or variations on it).

By default, the regression is solved without regularization, i.e., \(\mathcal{R}(\Ahat) = 0\).

The following code compares the OpInf ROM matrix \(\Ahat\) to the intrusive ROM matrix \(\tilde{\A} = \Vr\trp\A\Vr\).

model.fit(states=Q_, ddts=Qdot_exact)

print(model)

ContinuousModel

structure: dq / dt = Aq(t)

state_dimension: 2

input_dimension: 0

operators:

LinearOperator

state_dimension: 2

entries.shape: (2, 2)

solver: PlainSolver

data_matrix: (401, 2)

condition number: 9.0958e+01

lhs_matrix: (2, 401)

solve().shape: (2, 2)

solver: scipy.linalg.lstsq(cond=None, lapack_driver=None)

# Construct the intrusive ROM linear operator.

Vr = basis.entries

A_intrusive = Vr.T @ A @ Vr

# Compare the OpInf ROM linear operator to the intrusive one.

A_opinf = model.operators[0].entries

np.allclose(A_intrusive, A_opinf)

True

In this simple problem, using exact time derivative data \(\Vr\trp\A\Q\) and with zero regularization, OpInf produces the intrusive ROM. However, if estimated time derivative data are used instead, the OpInf ROM differs slightly from the intrusive ROM.

# Construct the OpInf ROM with estimated time derivatives.

model.fit(states=Q_, ddts=Qdot_)

A_opinf = model.operators[0].entries

np.allclose(A_intrusive, A_opinf)

False

# Check the difference between intrusive projection and OpInf.

la.norm(A_intrusive - A_opinf) / la.norm(A_intrusive)

np.float64(0.004709272497110991)

Regularization: Stabilizing the Inference Problem#

Ill-conditioning in the data, errors in the estimation of the time derivatives, or overfitting to the data can result in an \(\Ahat\) that defines an inaccurate or even unstable ROM. Introducing a regularization term promotes solutions that respect both the training data and the physics of the problem. One common option is Tikhonov regularization, which sets \(\mathcal{R}(\Ahat) = \|\lambda\Ahat\|_{F}^{2}\) to penalize the entries of the learned operators.

# Define a solver for the Tikhonov-regularized least-squares problem.

model = opinf.models.ContinuousModel(

"A",

solver=opinf.lstsq.L2Solver(regularizer=1e-2),

)

# Construct the OpInf ROM through regularized least squares.

model.fit(states=Q_, ddts=Qdot_exact)

A_opinf = model.operators[0].entries

# Compare to the intrusive model.

np.allclose(A_intrusive, A_opinf)

False

# Check the difference between intrusive projection and OpInf.

la.norm(A_intrusive - A_opinf) / la.norm(A_intrusive)

np.float64(0.001161286442911637)

With inexact time derivatives or regularization, OpInf differs slightly from the intrusive operator \(\tilde{\A}\). However, we will see that in this example the ROM produced by OpInf is highly accurate. In fact, it is sometimes the case that OpInf outperforms intrusive Galerkin projection.

Regularization Matters

Regularization is important in all but the simplest OpInf problems.

If OpInf produces an unstable ROM, try different values for the regularizer.

See [MHW21] for an example of a principled choice of regularization for a combustion problem.

Solving the Reduced-order Model#

Once the model is calibrated, we may solve the ROM with opinf.models.ContinuousModel.predict(), which wraps scipy.integrate.solve_ivp(). This method takes an initial condition for the model \(\qhat_0 = \Vr\trp\q_0\), the time domain over which to record the solution, and any additional arguments for the integrator.

q0_ = basis.compress(q0) # Compress the initial conditions.

model = opinf.models.ContinuousModel(

"A",

solver=opinf.lstsq.L2Solver(regularizer=1e-8),

).fit(Q_, Qdot_)

Q_ROM_ = model.predict(q0_, t, method="BDF")

print(f"{Q_ROM_.shape=}")

Q_ROM_.shape=(2, 401)

The solution is still in the low-dimensional state space; it can be mapped to the original state space by applying \(\Vr\).

Q_ROM = basis.decompress(Q_ROM_)

print(f"{Q_ROM.shape=}")

Q_ROM.shape=(1023, 401)

Custom ODE Solvers

opinf.models.ContinuousModel.predict() is convenient, but scipy.integrate.solve_ivp() implements a limited repertoire of time integration schemes.

However, the ROM can be simulated by any ODE solver scheme by extracting the inferred operator \(\Ahat\).

If timestepper(A, q0) were a solver for systems of the form \(\ddt\qhat = \Ahat\qhat(t),\ \qhat(0) = \qhat_0\), we could simulate the ROM with the following code.

q0_ = basis.compress(q0) # Compress the initial conditions.

Q_ROM_ = timestepper(model.A_.entries, q0_) # Solve the ROM in the reduced space.

Q_ROM = basis.decompress(Q_ROM_) # Decompress the ROM solutions.

More generally, the method opinf.models.ContinuousModel.rhs() represents the right-hand side of the model, the \(\hat{\mathbf{f}}\) of \(\ddt\qhat(t) = \hat{\mathbf{f}}(t, \qhat(t))\).

General-purpose integrators can therefore be applied to the function opinf.models.ContinuousModel.rhs().

The ROM Class#

Up to this point, we have done the following steps using several package submodules.

opinf.basis: Data compression.opinf.ddt: Time derivative estimation.opinf.models: Specify and calibrate model operators, then integrate the reduced system.

The opinf.ROM class wraps these steps for convenience.

Its constructor takes a initialized basis, time derivative estimator, and model objects.

Then, fit() calibrates the basis, compresses the state data, estimates the time derivatives, and calibrates the model.

Use predict() to compress initial conditions, solve the model, and express the solutions in the original state space.

rom = opinf.ROM(

basis=opinf.basis.PODBasis(cumulative_energy=0.9999),

ddt_estimator=opinf.ddt.UniformFiniteDifferencer(t, "ord6"),

model=opinf.models.ContinuousModel(

operators="A",

solver=opinf.lstsq.L2Solver(regularizer=1e-8),

),

)

print(rom)

ROM

basis: PODBasis

full_state_dimension: None

reduced_state_dimension: None

SVD solver: scipy.linalg.svd()

ddt_estimator: UniformFiniteDifferencer

time_domain: 401 entries in [0.0, 1.0]

dt: 2.5000e-03

scheme: ord6()

model: ContinuousModel

structure: dq / dt = Aq(t)

state_dimension: None

input_dimension: 0

operators:

LinearOperator

state_dimension: None

entries.shape: None

solver: L2Solver (not trained)

regularizer: 1.0000e-08

SVD solver: scipy.linalg.svd(full_matrices=False, lapack_driver='gesdd')

rom.fit(Q)

print(rom)

ROM

basis: PODBasis

full_state_dimension: 1023

reduced_state_dimension: 2

cumulative energy: 99.999899%

residual energy: 1.0084e-06

401 basis vectors available

SVD solver: scipy.linalg.svd()

ddt_estimator: UniformFiniteDifferencer

time_domain: 401 entries in [0.0, 1.0]

dt: 2.5000e-03

scheme: ord6()

model: ContinuousModel

structure: dq / dt = Aq(t)

state_dimension: 2

input_dimension: 0

operators:

LinearOperator

state_dimension: 2

entries.shape: (2, 2)

solver: L2Solver

data_matrix: (401, 2)

condition number: 9.0958e+01

lhs_matrix: (2, 401)

solve().shape: (2, 2)

regularizer: 1.0000e-08

SVD solver: scipy.linalg.svd(full_matrices=False, lapack_driver='gesdd')

Q_ROM_2 = rom.predict(q0, t, method="BDF")

np.all(Q_ROM_2 == Q_ROM)

np.True_

Evaluate ROM Performance#

The quality or usefulness of a ROM depends on its accuracy and its computational efficiency.

ROM Accuracy#

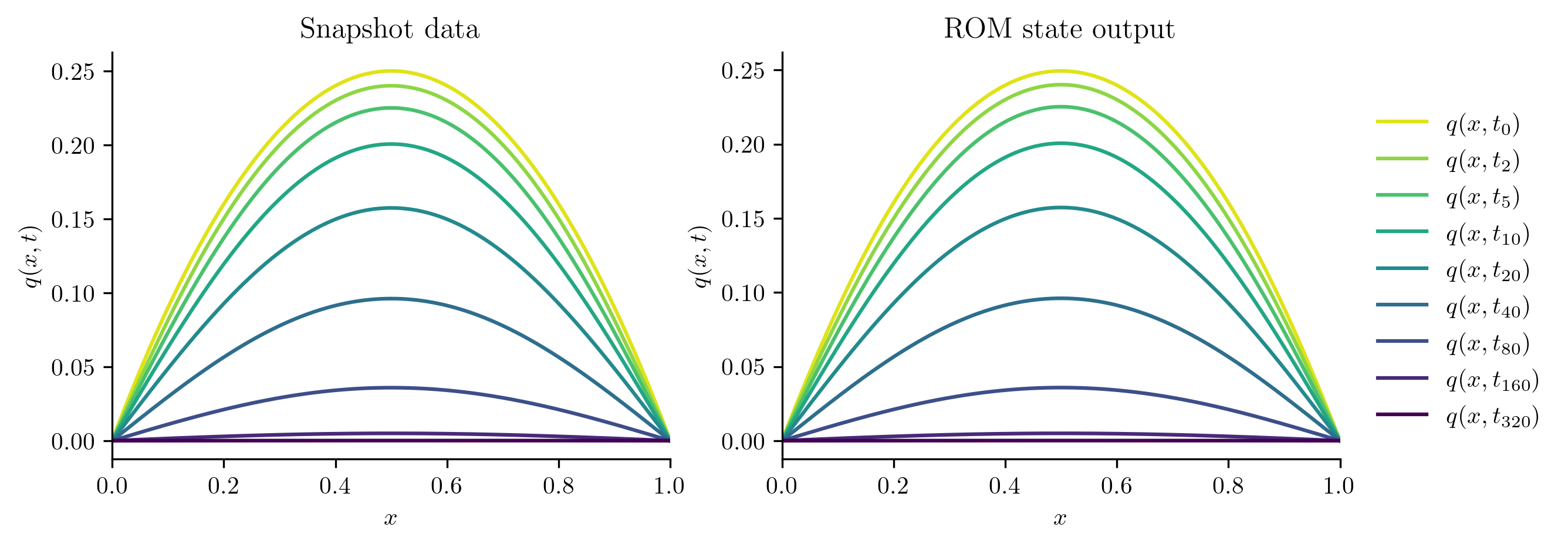

To get a sense of how well the ROM approximates the FOM, we begin by visualizing the simulation output Q_ROM.

It should look similar to the plot of the snapshot data Q.

fig, [ax1, ax2] = plt.subplots(1, 2)

plot_heat_data(Q, "Snapshot data", ax1)

plot_heat_data(Q_ROM, "ROM state output", ax2)

ax1.legend([])

plt.show()

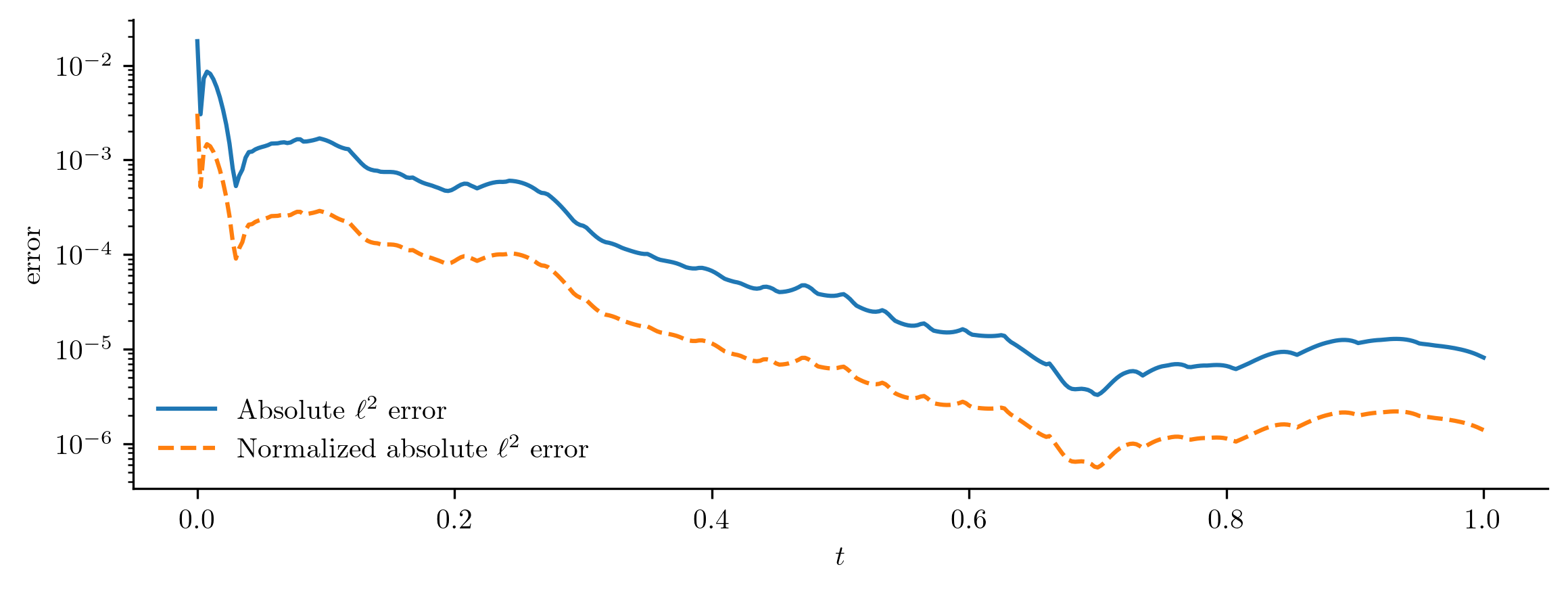

For more detail, we evaluate the \(\ell^2\) error of the ROM output, comparing it to the snapshot set via opinf.post.lp_error().

This calculates the absolute and relative error as a function of time,

Normalized Absolute Error

In this problem, \(\q(t)\to\0\) as \(t\) increases, so a relative error may not be appropriate since \(\|\q(t)\|_{2}\) appears in the denominator.

In situations like this, consider using the normalized absolute error by replacing the denominator with \(\max_{\tau\in[t_0,t_f]}\|\q(t)\|.\)

Set normalize=True in opinf.post.lp_error() to use this error measure instead of the relative error.

abs_l2err, norm_l2err = opinf.post.lp_error(Q, Q_ROM, normalize=True)

fig, ax = plt.subplots(1, 1)

ax.semilogy(t, abs_l2err, "-", label=r"Absolute $\ell^2$ error")

ax.semilogy(t, norm_l2err, "--", label=r"Normalized absolute $\ell^2$ error")

ax.set_xlabel(r"$t$")

ax.set_ylabel("error")

ax.legend(loc="lower left")

plt.show()

In this simple example, the error decreases with time (as solutions get quickly pushed to zero), but this is not the kind of error behavior that should be expected when modeling more complicated phenomena.

We can also get a scalar error measurement by calculating the relative Frobenius norm error with opinf.post.frobenius_error().

abs_froerr, rel_froerr = opinf.post.frobenius_error(Q, Q_ROM)

print(f"Relative Frobenius-norm error: {rel_froerr:%}")

Relative Frobenius-norm error: 0.103165%

In other words, the ROM simulation is within about 0.1% of the snapshot data. Note that this value is very close to the projection error that we calculated earlier.

ROM Computational Speedup#

When a FOM is available, a ROM is only useful if it can be solved much faster than the FOM.

The solution speed can be quickly checked using opinf.utils.TimedBlock.

with opinf.utils.TimedBlock("Full-order solve"):

full_order_solve(q0, t)

Full-order solve...done in 0.29 s.

with opinf.utils.TimedBlock("Reduced-order solve"):

rom.predict(q0, t, method="BDF")

Reduced-order solve...done in 0.01 s.

More precise measurements can be take by aliasing the opinf.utils.TimedBlock and accessing the elapsed attribute.

Below, we solve each model several times to get an average time.

n_trials = 10

with opinf.utils.TimedBlock(f"{n_trials} FOM solves") as fomtime:

for _ in range(n_trials):

full_order_solve(q0, t)

with opinf.utils.TimedBlock(f"{n_trials} ROM solves") as romtime:

for _ in range(n_trials):

rom.predict(q0, t, method="BDF")

print(f"Average FOM time: {fomtime.elapsed / n_trials :.6f} s")

print(f"Average ROM time: {romtime.elapsed / n_trials :.6f} s")

print(f"ROM speedup: {fomtime.elapsed / romtime.elapsed :.4f} times!")

10 FOM solves...done in 2.94 s.

10 ROM solves...done in 0.03 s.

Average FOM time: 0.294117 s

Average ROM time: 0.003406 s

ROM speedup: 86.3501 times!

In this example, the FOM is efficient because it takes advantage of the sparsity of \(\A\in\RR^{n\times n}\). Even so, the ROM achieves a modest speedup due to the smaller size of \(\Ahat\in\RR^{r\times r}\).

Prediction: New Initial Conditions#

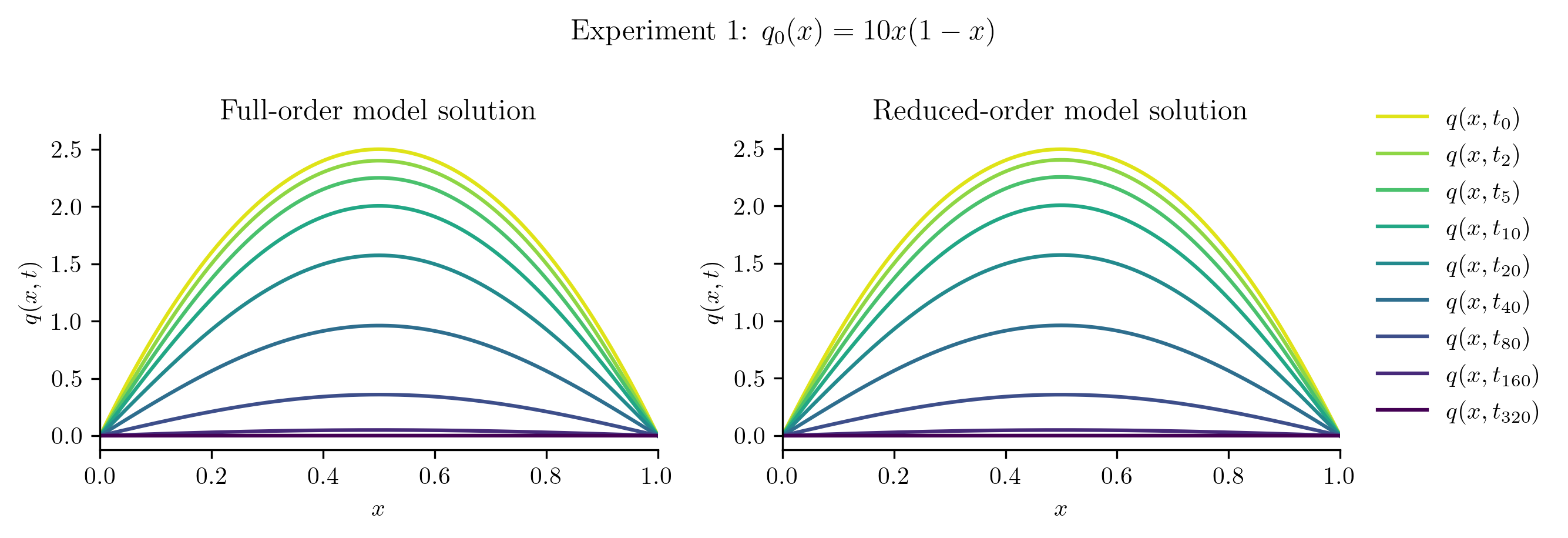

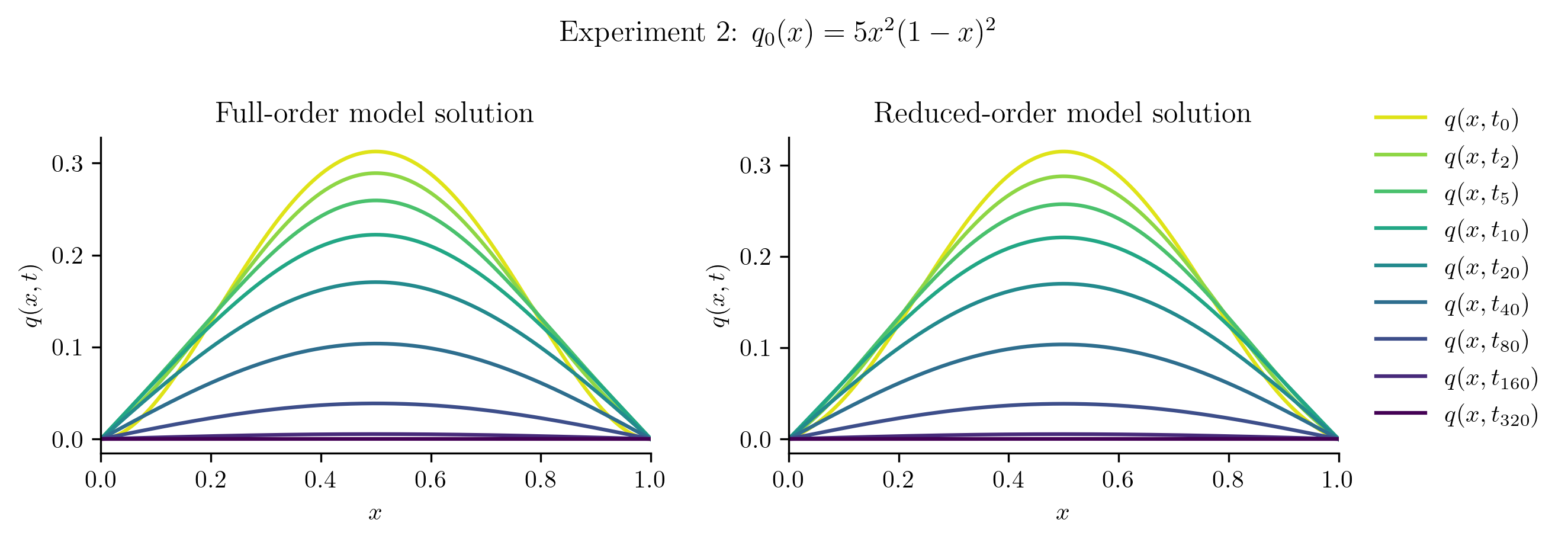

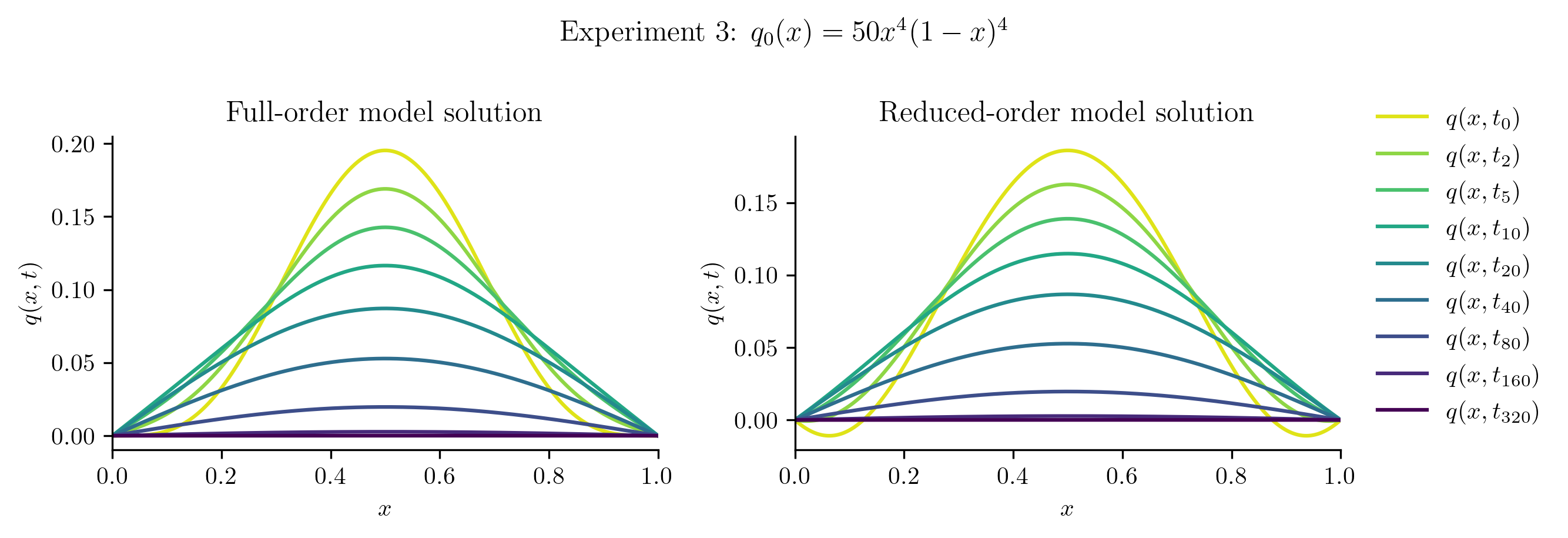

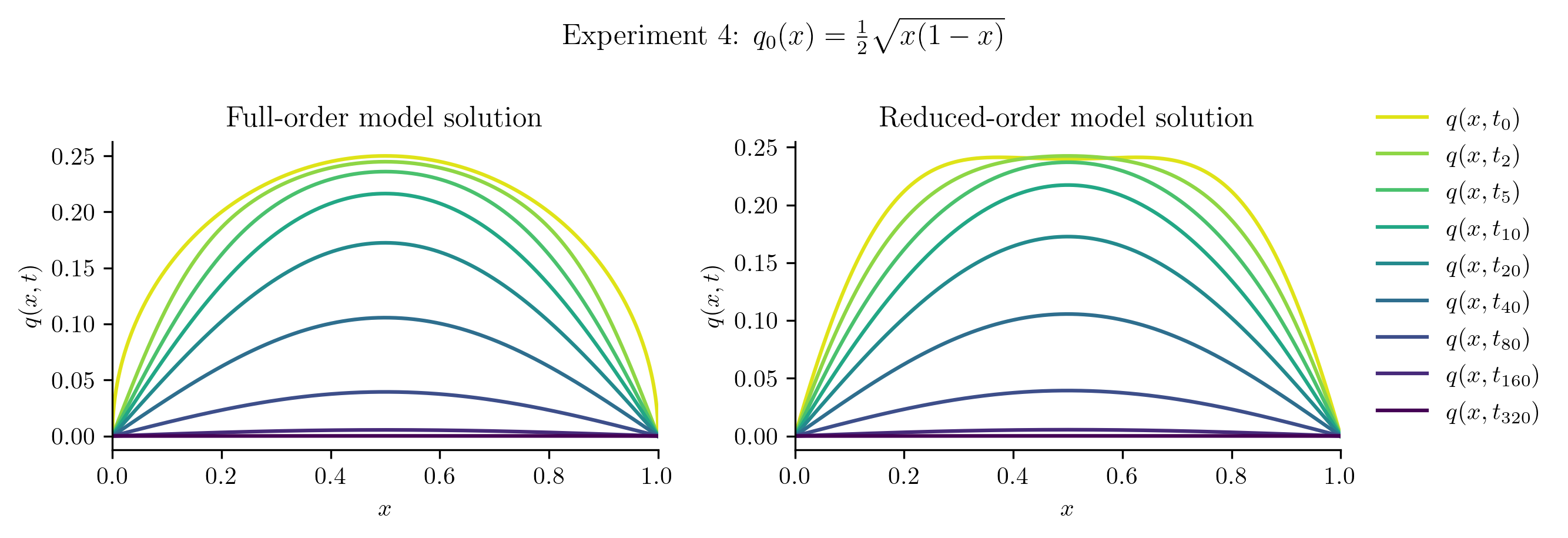

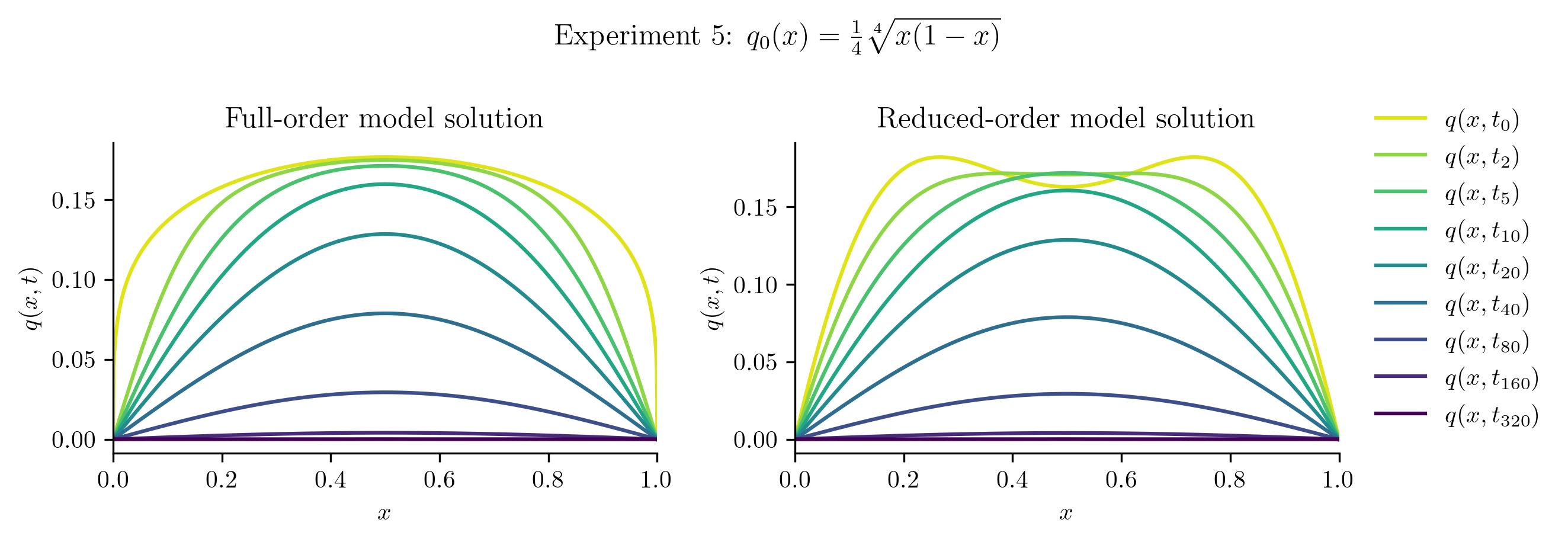

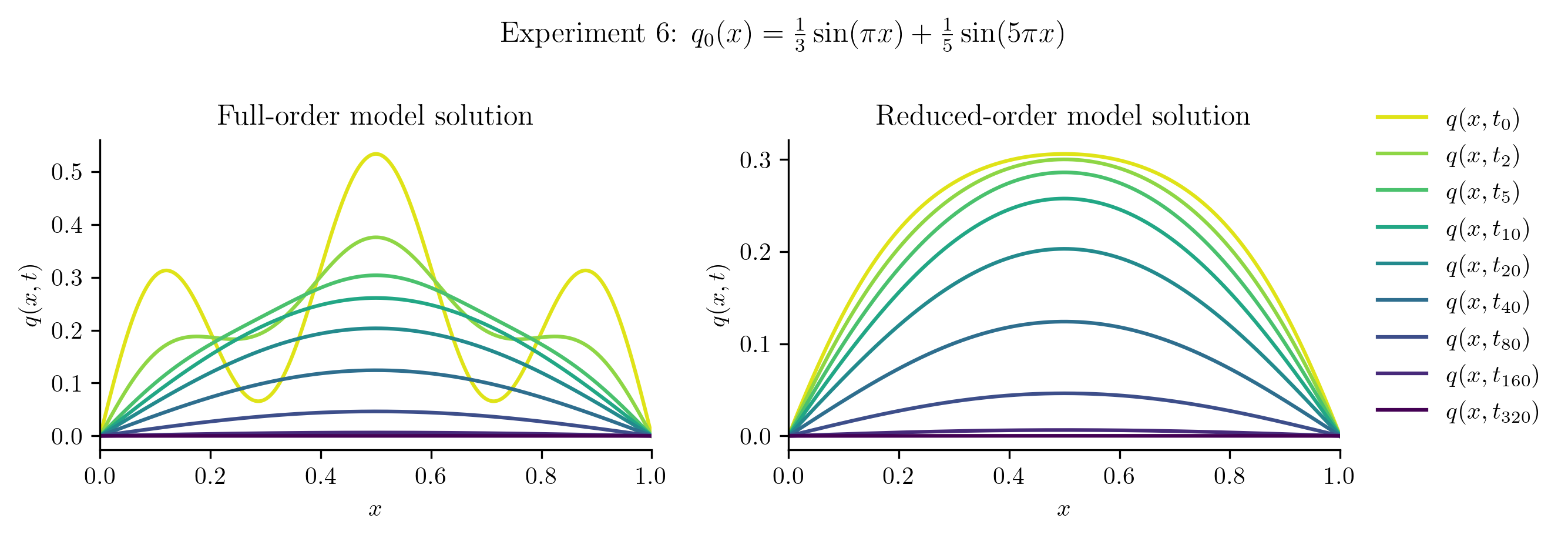

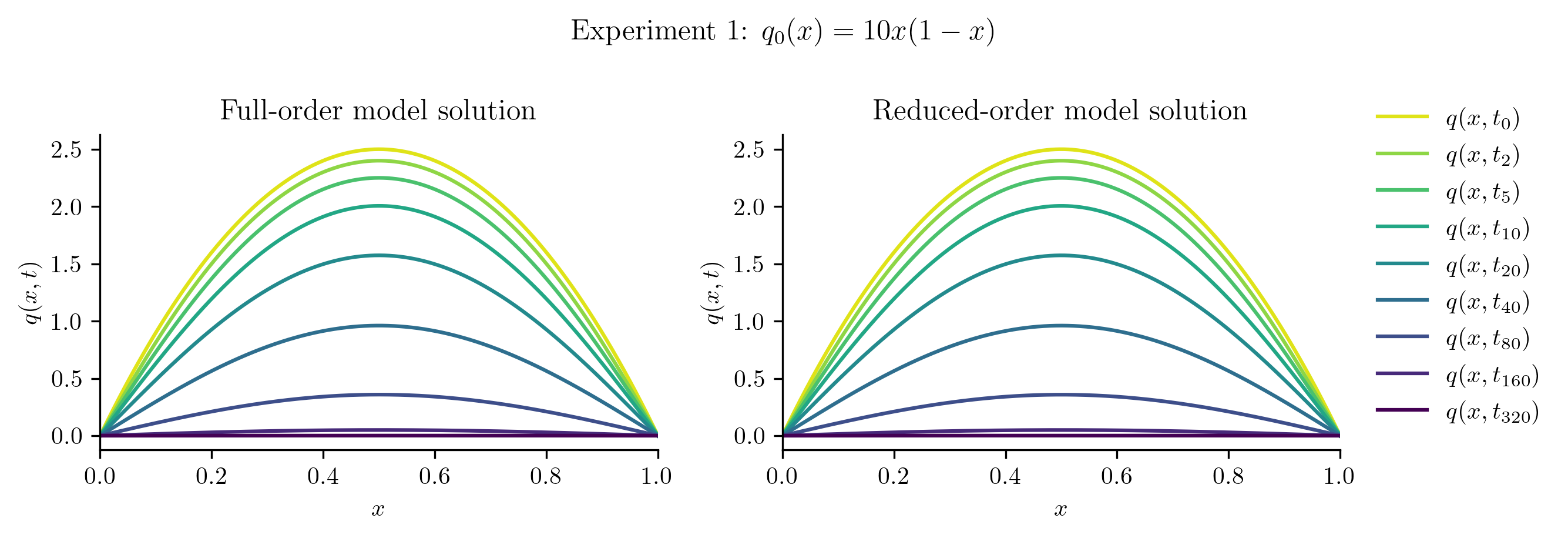

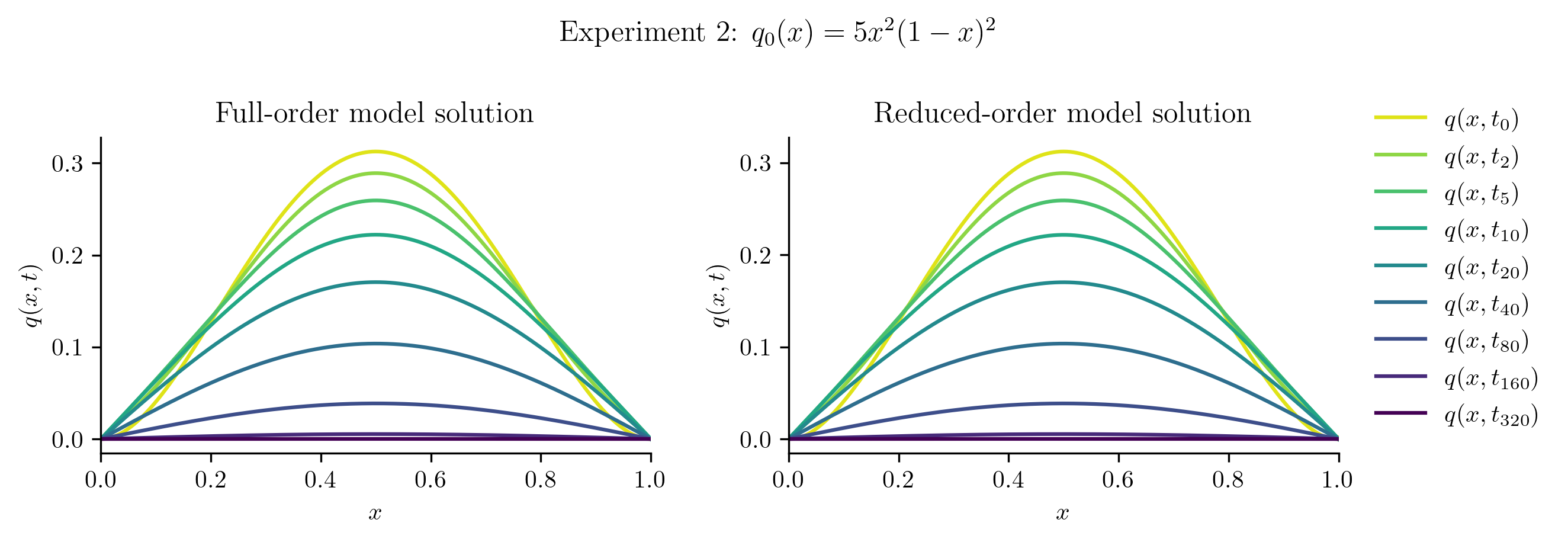

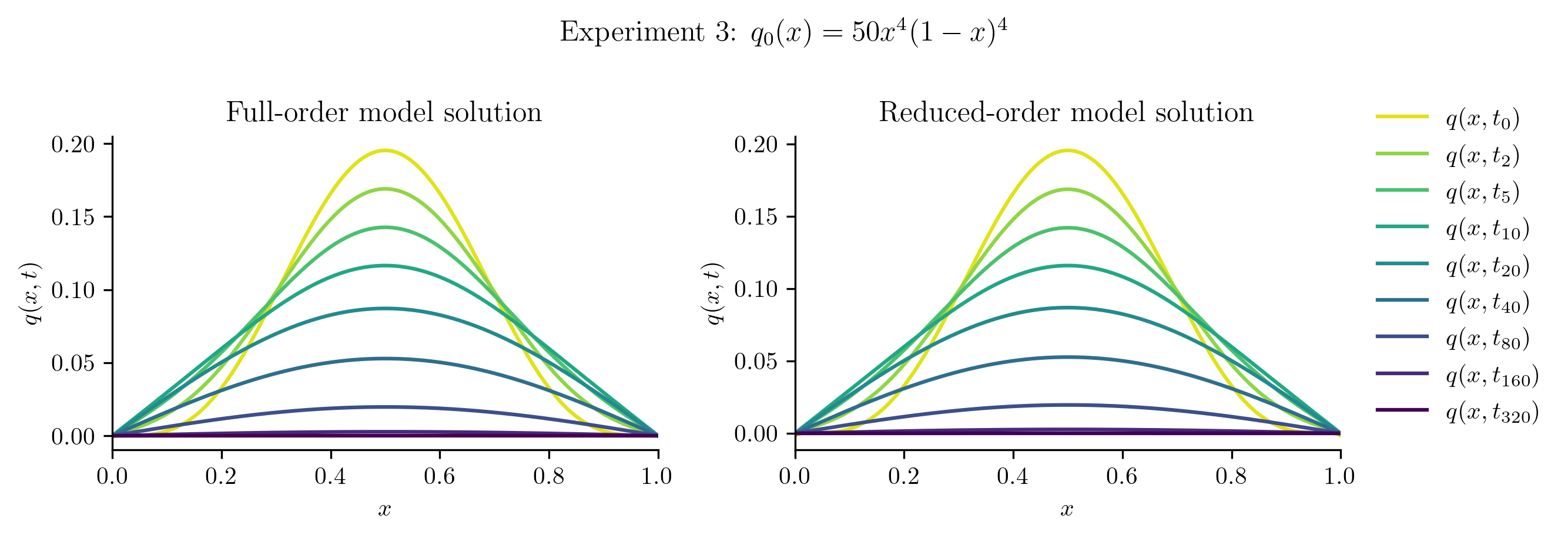

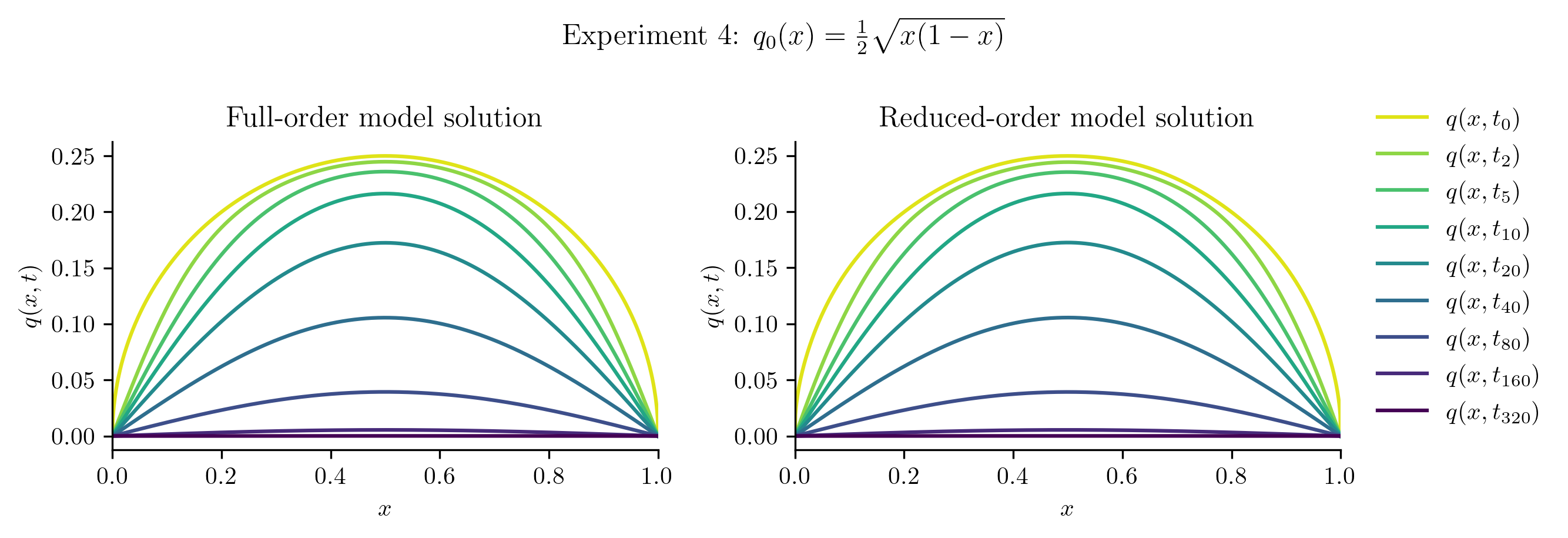

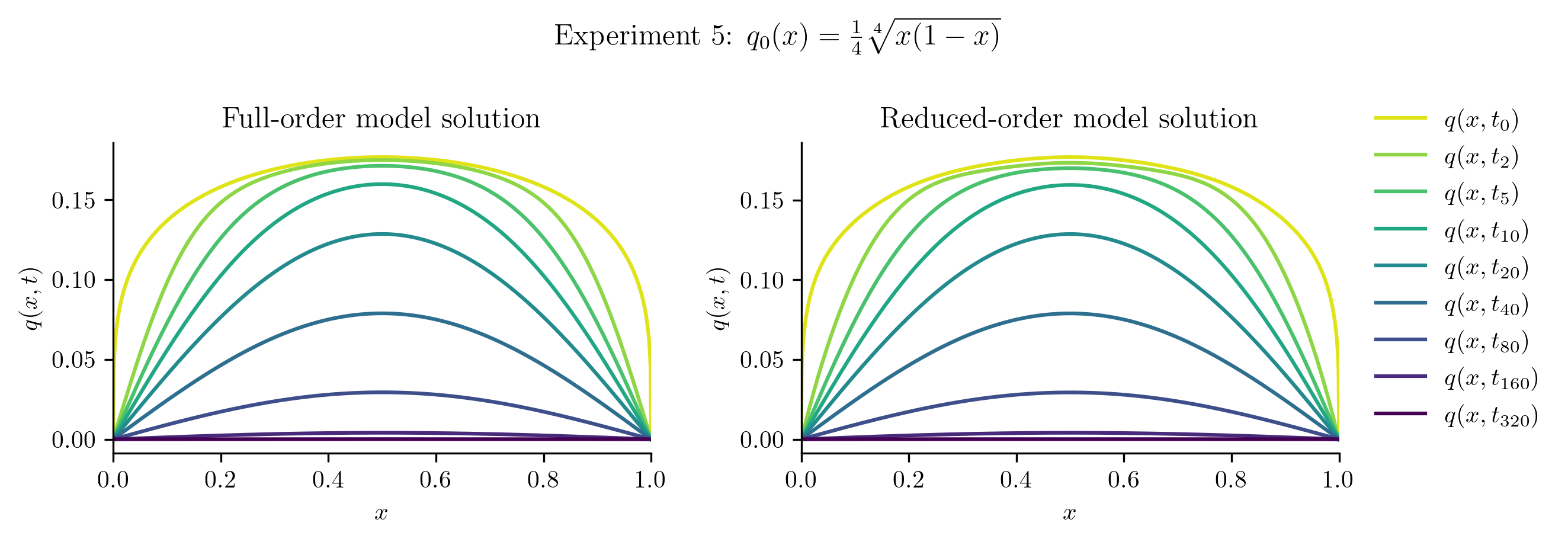

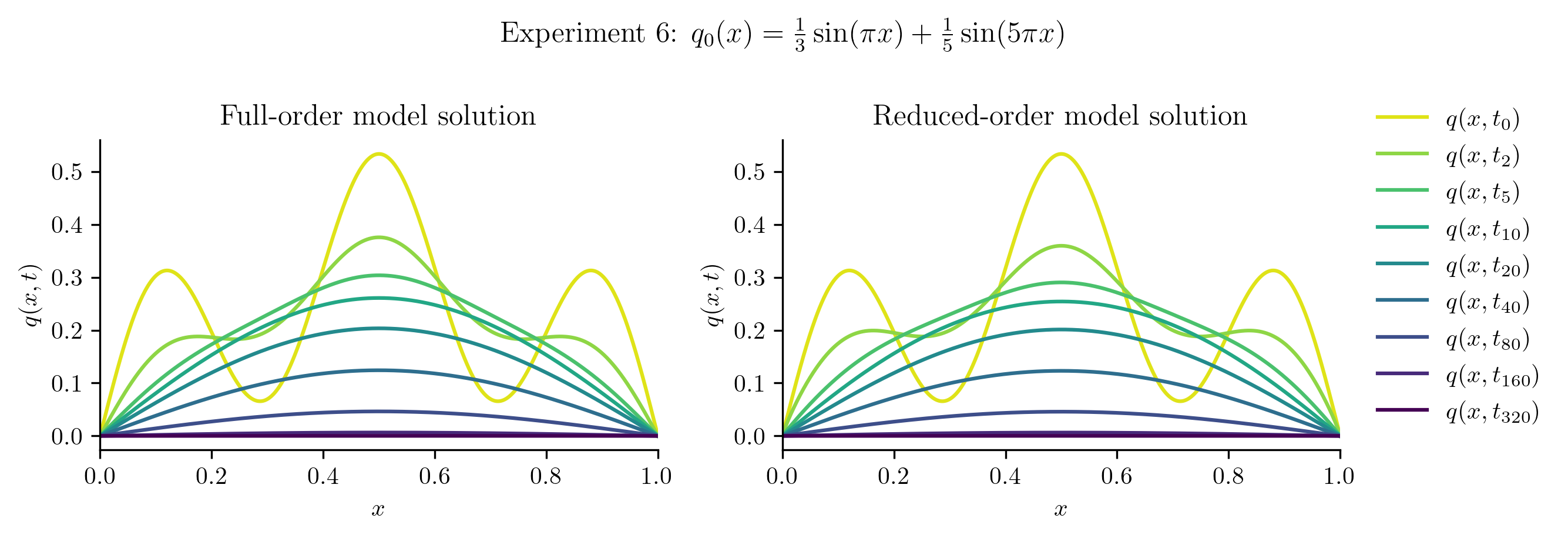

The ROM was trained using only data corresponding to the initial condition \(q_0(x) = x(1 - x).\) We’ll now test the ROM on the following new initial conditions and compare the results to the corresponding FOM solution:

Before we compute the ROM error, we also compute the projection error of the new initial condition,

If this projection error is large, then the new initial condition cannot be represented well within the range of \(\Vr\). This will be apparent in the ROM solutions.

First Attempt#

def test_new_initial_condition(q0, rom, label=None):

"""Compare full-order model and reduced-order model solutions for a given

initial condition.

Parameters

----------

q0 : (n,) ndarray

Heat equation initial conditions q0(x) to be tested.

rom : opinf.ROM

Trained reduced-order model object.

label : str

Description of the initial condition being tested.

"""

# Calculate the projection error of the new initial condition.

rel_projerr = rom.basis.projection_error(q0, relative=True)

# Solve the full-order model (FOM) and the reduced-order model (ROM).

Q_FOM = full_order_solve(q0, t)

Q_ROM = rom.predict(q0, t, method="BDF")

# Plot the FOM and ROM solutions side by side.

fig, [ax1, ax2] = plt.subplots(1, 2)

plot_heat_data(Q_FOM, "Full-order model solution", ax1)

plot_heat_data(Q_ROM, "Reduced-order model solution", ax2)

ax1.legend([])

if label:

fig.suptitle(label, y=1)

fig.tight_layout()

# Calculate the ROM error in the Frobenius norm.

abs_froerr, rel_froerr = opinf.post.frobenius_error(Q_FOM, Q_ROM)

# Report results.

plt.show()

print(

f"Relative projection error of initial condition: {rel_projerr:.2%}",

f"Relative Frobenius-norm ROM error: {rel_froerr:.2%}",

sep="\n",

)

return rel_projerr, rel_froerr

Relative projection error of initial condition: 0.31%

Relative Frobenius-norm ROM error: 0.12%

Relative projection error of initial condition: 1.33%

Relative Frobenius-norm ROM error: 0.51%

Relative projection error of initial condition: 6.80%

Relative Frobenius-norm ROM error: 2.54%

Relative projection error of initial condition: 7.52%

Relative Frobenius-norm ROM error: 1.77%

Relative projection error of initial condition: 15.13%

Relative Frobenius-norm ROM error: 3.61%

Relative projection error of initial condition: 50.86%

Relative Frobenius-norm ROM error: 15.36%

| Relative projection error of initial condition | Relative Frobenius-norm ROM error | |

|---|---|---|

| Experiment 1 | 0.3080% | 0.1225% |

| Experiment 2 | 1.3316% | 0.5132% |

| Experiment 3 | 6.7974% | 2.5361% |

| Experiment 4 | 7.5209% | 1.7673% |

| Experiment 5 | 15.1321% | 3.6147% |

| Experiment 6 | 50.8573% | 15.3624% |

Second Attempt: a Better Basis#

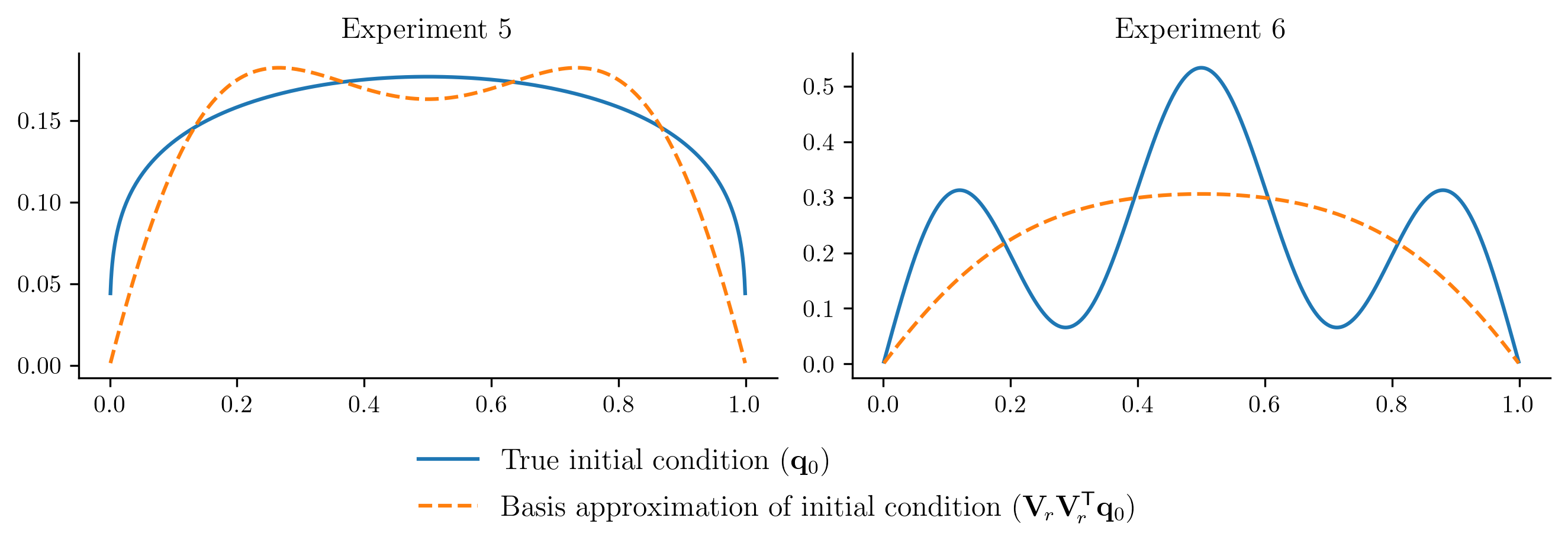

The ROM performs well for \(q_{0}(x) = 10x(1 - x)\), which is unsurprising because this new initial condition is a scalar multiple of the initial condition used to generate the training data. In other cases, the ROM is less successful because the new initial condition cannot be represented well in the span of the basis vectors. For example:

plot_initial_condition_projection(basis)

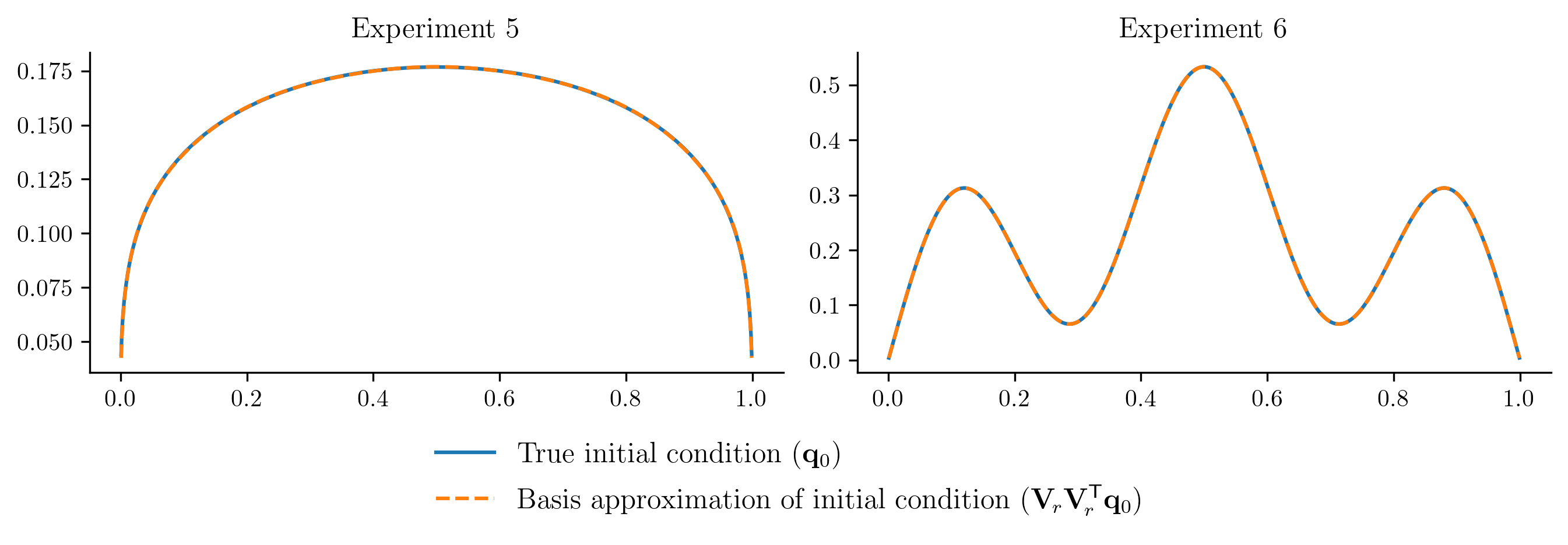

To improve the ROM performace without getting new data from the FOM, we will enrich the basis by

Including the new initial conditions in the basis computation, and

Using a few more basis vectors (we currently have \(r = 2\), let’s use \(r = 5\)).

# Include the new initial conditions in the basis training data.

Q_and_new_q0s = np.column_stack((Q, *q0_new))

newbasis = opinf.basis.PODBasis(num_vectors=5).fit(Q_and_new_q0s)

print(newbasis)

# Plot the projection of the initial conditions in the new basis

plot_initial_condition_projection(newbasis)

PODBasis

full_state_dimension: 1023

reduced_state_dimension: 5

cumulative energy: 99.999993%

residual energy: 6.8109e-08

407 basis vectors available

SVD solver: scipy.linalg.svd()

# Initialize a ROM with the new basis.

rom = opinf.ROM(

basis=newbasis,

ddt_estimator=opinf.ddt.UniformFiniteDifferencer(t, "ord6"),

model=opinf.models.ContinuousModel(

operators="A",

solver=opinf.lstsq.L2Solver(regularizer=1e-8),

),

)

# Use the same training data as before, but do not reset the basis.

_ = rom.fit(Q, fit_basis=False)

print(rom)

ROM

basis: PODBasis

full_state_dimension: 1023

reduced_state_dimension: 5

cumulative energy: 99.999993%

residual energy: 6.8109e-08

407 basis vectors available

SVD solver: scipy.linalg.svd()

ddt_estimator: UniformFiniteDifferencer

time_domain: 401 entries in [0.0, 1.0]

dt: 2.5000e-03

scheme: ord6()

model: ContinuousModel

structure: dq / dt = Aq(t)

state_dimension: 5

input_dimension: 0

operators:

LinearOperator

state_dimension: 5

entries.shape: (5, 5)

solver: L2Solver

data_matrix: (401, 5)

condition number: 7.1660e+04

lhs_matrix: (5, 401)

solve().shape: (5, 5)

regularizer: 1.0000e-08

SVD solver: scipy.linalg.svd(full_matrices=False, lapack_driver='gesdd')

Relative projection error of initial condition: 0.01%

Relative Frobenius-norm ROM error: 0.04%

Relative projection error of initial condition: 0.11%

Relative Frobenius-norm ROM error: 0.08%

Relative projection error of initial condition: 0.17%

Relative Frobenius-norm ROM error: 0.33%

Relative projection error of initial condition: 0.18%

Relative Frobenius-norm ROM error: 0.34%

Relative projection error of initial condition: 0.11%

Relative Frobenius-norm ROM error: 0.95%

Relative projection error of initial condition: 0.00%

Relative Frobenius-norm ROM error: 2.61%

| Relative projection error of initial condition | Relative Frobenius-norm ROM error | |

|---|---|---|

| Experiment 1 | 0.0054% | 0.0406% |

| Experiment 2 | 0.1131% | 0.0826% |

| Experiment 3 | 0.1731% | 0.3323% |

| Experiment 4 | 0.1836% | 0.3389% |

| Experiment 5 | 0.1149% | 0.9499% |

| Experiment 6 | 0.0027% | 2.6084% |

With a more expressive basis, the ROM performance improves significantly.

No Better than the Basis

This example illustrates a fundamental principle of model reduction: the accuracy of the ROM is limited by the accuracy of the underlying low-dimensional approximation, which in this case is \(\q(t) \approx \Vr\qhat(t)\). In other words, a good \(\Vr\) is critical in order for the ROM to be accurate and predictive.